Best Practices for Designing an Efficient Machine Learning Pipeline

Machine learning pipelines have become indispensable for enterprises seeking to deploy accurate and reliable AI systems at scale. By standardizing, automating, and streamlining repetitive tasks, well-architected pipelines boost efficiency, reproducibility, and collaboration across teams.

However, at many companies, poorly designed pipelines become bottlenecks that slow the pace of innovation. Model development cycles drag on for months as data scientists manually wrangle data and iterate on models, and engineering teams struggle to integrate new solutions into live systems.

To stay competitive in today's fast-moving business environment, companies need pipelines that facilitate rapid experimentation and deployment cycles measured in weeks, not months. This requires optimizing pipelines for speed and flexibility at each stage without compromising on quality or governance.

In this article, we'll discuss best practices for designing and optimizing machine learning pipelines to get models to end users significantly faster through the following techniques:

- Implementing modular, configurable components
- Automating repetitive tasks
- Engineering for scalability from the start
- Streamlining model evaluation and selection
- Adopting continuous integration/deployment practices
- Monitoring models post-deployment

By applying these strategies, companies can both accelerate innovation and continuously refine AI solutions to maximize their real-world impact.

Implementing modular, configurable components

Flexibility through Modularity

When designing a machine learning pipeline, it is important to take flexibility into consideration so the pipeline can accommodate changes over time. One effective approach is to develop the pipeline using modular and configurable components.

Each individual step or process in the pipeline should be treated as an independent, standalone unit that can be separated from the other parts. This means defining clear interfaces between components so one section can be developed or altered without affecting the others. The components should not be tightly coupled and reliant on each other.

For example, the data preprocessing step could be a self-contained module that cleanses, transforms, and formats the raw input data. It would have well-defined inputs for the original data and outputs for the cleaned data. The preprocessing logic could then be updated or swapped out without disrupting model training or evaluation modules downstream.
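As a minimal sketch of this idea, the snippet below treats each step as a function that takes a list of records and returns a new list. The record layout, the `amount` field, and the step names are hypothetical and chosen only for illustration; real pipelines would typically pass data frames or file references instead.

```python
from typing import Callable, Dict, List, Optional

# Shared interface: every step takes a list of records and returns a new list.
Record = Dict[str, Optional[float]]
Step = Callable[[List[Record]], List[Record]]


def drop_incomplete(records: List[Record]) -> List[Record]:
    """Cleansing step: discard records that contain a missing value."""
    return [r for r in records if all(v is not None for v in r.values())]


def scale_amount(records: List[Record]) -> List[Record]:
    """Transformation step: divide the hypothetical 'amount' field by its maximum."""
    highest = max((r["amount"] for r in records), default=0.0)
    if highest == 0:
        return [dict(r) for r in records]
    return [{**r, "amount": r["amount"] / highest} for r in records]


def run_pipeline(steps: List[Step], records: List[Record]) -> List[Record]:
    """Run the steps in order; they are coupled only through the Record interface."""
    for step in steps:
        records = step(records)
    return records


raw = [{"amount": 50.0}, {"amount": None}, {"amount": 200.0}]
cleaned = run_pipeline([drop_incomplete, scale_amount], raw)
print(cleaned)
```

Because the steps share only the input and output shape, either one could be replaced or reordered without touching the other.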

Adding Configurability

In addition to modularity, the pipeline components also need to be configurable. This allows each part of the process to be adjusted based on the particular project's needs and environment. Instead of being rigid, components are designed to accept configurable parameters.

The data preprocessing module, for instance, may have configurable options for which transformations to apply, like excluding certain features, capping outlier values, or normalizing data types. These settings could be modified per dataset without changing any code. Similarly, the model training component accepts hyperparameters that control aspects of model fitting like the learning rate, number of epochs, or type of optimization algorithm.
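One way to picture this is a small settings object passed into the preprocessing function. The sketch below is illustrative only: `PreprocessConfig`, its fields, and the sample column names are assumptions, and in practice the values would more likely be loaded from a configuration file than written inline.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class PreprocessConfig:
    """Hypothetical settings that vary per dataset while the code stays the same."""
    exclude_features: List[str] = field(default_factory=list)
    outlier_cap: Optional[float] = None  # values above this are clipped; None disables capping


def preprocess(rows: List[Dict[str, float]], config: PreprocessConfig) -> List[Dict[str, float]]:
    """Apply only the transformations that the configuration enables."""
    result = []
    for row in rows:
        kept = {k: v for k, v in row.items() if k not in config.exclude_features}
        if config.outlier_cap is not None:
            kept = {k: min(v, config.outlier_cap) for k, v in kept.items()}
        result.append(kept)
    return result


rows = [{"age": 34.0, "income": 250000.0, "user_id": 7.0}]
config = PreprocessConfig(exclude_features=["user_id"], outlier_cap=100000.0)
processed = preprocess(rows, config)
print(processed)
```

Switching datasets then means supplying a different `PreprocessConfig`, not editing `preprocess`.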

Configuration options provide an important level of flexibility and customization. They empower users to tailor each pipeline building block to fulfill customized requirements. For example, a deployment environment with limited computational resources may choose a simpler model and smaller training parameters compared to one with massive parallelism. Or a time-sensitive project may prioritize a faster training regimen over maximizing accuracy. Configurable components accommodate variants like these.

Implementing modularity and configurability requires some initial design effort but provides numerous long-term benefits. It helps ensure each piece of the pipeline can be comprehended, tested, and altered in isolation without disrupting dependencies. New models, data types, or target systems can be integrated by combining appropriate modules, avoiding complete rewrites.

Maintainability is improved since pipeline sections no longer require simultaneous changes. Modular tests and updates can now be implemented incrementally. Fault tracing likewise becomes simpler when problems can be pinpointed to specific self-contained modules. Engineers gain flexibility to experiment more aggressively without risking destabilization.

Overall, this structure encourages code and process reuse. Successful components develop a proven track record and gain extensive testing, then serve as reusable building blocks for future related pipelines. Standardization is increased while development velocity and innovation are accelerated.

Automating repetitive tasks

The Need for Automation in Machine Learning

Automating repetitive tasks is a crucial part of optimizing any machine learning pipeline for faster and more efficient model deployment. When deploying machine learning models, data scientists commonly spend the majority of their time on procedural tasks like data preprocessing, feature engineering, model training and evaluation. While necessary, these repetitive steps offer little opportunity for innovation if performed manually. To truly accelerate the entire machine learning workflow from model development through deployment, it is important to automate as many of these repetitive tasks as possible.

Automation ensures consistency and removes the potential for human error each time one of these procedural steps is performed. It allows tasks like data cleaning, transformation and feature selection to be standardized and reproduced identically on each iteration. This consistency is key for model optimization and gaining valuable insights from experimental results. Manual processes risk introducing inconsistent practices or unintended variability that can undermine model performance. Automating standardized procedures through a pipeline guarantees repeatability that is difficult to achieve when relying on human operators.

Automation also provides major efficiency gains by reducing the time spent on repetitive manual work. For data scientists, this frees up time to focus on more strategic work like algorithm selection, innovative feature engineering and model architecture design. When procedural steps are automated, models can be retrained and redeployed much more rapidly to take advantage of new data. Retraining cycles that once took months can shrink to days or even hours, allowing for far more experimentation.

Automation Tools

Tools like Apache Airflow and AWS Step Functions offer configurable frameworks to codify an entire machine learning workflow from start to finish. Using such an orchestration tool, each step in the pipeline from data ingestion to model monitoring can be automated and chained together programmatically. This hands-free operation allows the pipeline to run continuously in the background without human intervention beyond the initial setup. Failure handling, retries, and other protections can be built in to ensure reliable execution.
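To make the chaining and retry behavior concrete, here is a minimal standard-library sketch. It does not use the Airflow or Step Functions APIs; `run_with_retries`, `run_workflow`, and the two sample tasks are hypothetical names, and a real orchestrator adds scheduling, logging, and state tracking on top of this basic pattern.

```python
import time
from typing import Any, Callable, Dict, List


def run_with_retries(task: Callable[[Any], Any], payload: Any,
                     max_attempts: int = 3, delay_seconds: float = 0.0) -> Any:
    """Call task(payload), retrying on any exception up to max_attempts times."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task(payload)
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            time.sleep(delay_seconds)


def run_workflow(tasks: List[Callable[[Any], Any]], payload: Any) -> Any:
    """Chain tasks so that each one receives the previous task's output."""
    for task in tasks:
        payload = run_with_retries(task, payload)
    return payload


calls: Dict[str, int] = {"ingest": 0}


def flaky_ingest(_: Any) -> List[int]:
    """Hypothetical ingestion step that fails once before succeeding."""
    calls["ingest"] += 1
    if calls["ingest"] == 1:
        raise ConnectionError("temporary outage")
    return [1, 2, 3]


def train(data: List[int]) -> float:
    """Stand-in for model training: returns the mean of the data."""
    return sum(data) / len(data)


result = run_workflow([flaky_ingest, train], None)
print(result, calls["ingest"])
```

The first ingestion attempt fails, the retry succeeds, and the training stand-in runs on its output without any manual step in between.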

Reducing Manual Intervention

With an automated machine learning pipeline powered by task orchestration tools, data scientists are unshackled from procedural programming. They regain time previously spent on repetitive manual work and can reallocate those hours to more strategic model development activities. Automation guarantees consistency between iterations of the pipeline and accelerates the overall workflow. Most importantly, it allows models to be retrained continuously on new data with minimal human effort through a fully automated and reliable process. This closes the loop between model building, deployment and ongoing optimization - fundamental for maximizing the real-world impact of machine learning applications.

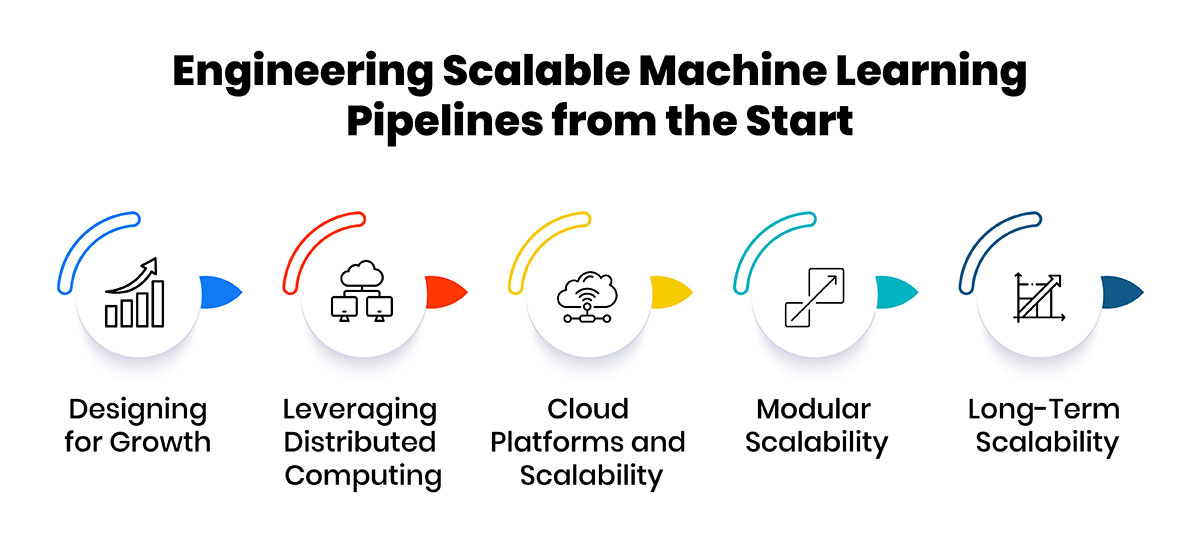

Engineering for scalability from the start

Designing for Growth

When first constructing a machine learning pipeline, organizations must engineer it with scalability as a core consideration. As data volumes and model complexities inevitably grow over time, the pipeline will need to efficiently process much larger amounts of information and more sophisticated algorithms. To enable this, the pipeline architecture should be designed with scalability in mind from the ground up.

Leveraging Distributed Computing

Distributed computing techniques can allow the pipeline to leverage additional resources as needs increase. For example, Apache Spark may be used to split processing jobs across clusters of many computers working in parallel. This distributed approach ensures the pipeline can seamlessly take advantage of more machines without reengineering. Model training steps within the pipeline could also be parallelized to achieve further performance gains as models become more data-hungry over time.

Cloud Platforms and Scalability

Infrastructure like cloud platforms provides scalable hosting that enables pipelines to auto-scale their underlying resources up or down dynamically based on workload. Starting on infrastructure like this means the pipeline can start small and cost-effectively while having the flexibility to quickly scale out to thousands of nodes in the future as requirements grow.

Modular Scalability

Moreover, the individual components that make up the pipeline, such as data processing or model evaluation, should be modularized. This modular design supports scaling each piece independently. For instance, the data processing module could distribute work among many more workers, while model training scales out across GPU clusters as models and datasets grow.

From the beginning, pipelines must measure performance against best-in-class scalability expectations. Benchmarks help validate that the system can maintain responsiveness while expanding workload capacity 10X, 100X or more. If bottlenecks emerge, the design should localize them for efficient resolution.

Long-Term Scalability

Taking a “scale first” mentality sets the pipeline up for long-term, sustainable growth. It ensures the system can adapt affordably to the types of large-scale deployments that will become necessary over the lifespan of many machine learning projects. Starting with scalability as a top priority establishes a solid foundation for the pipeline to efficiently support the organization as data sources and AI applications expand rapidly in scope and complexity.

Streamlining model evaluation and selection

Parallel Model Evaluation

Model evaluation and selection is an important step in any machine learning pipeline. It allows data scientists to assess how well a model performs on unseen data and identify the most accurate one for the task at hand. However, this process can become lengthy and repetitive if done manually. Automating evaluations is key to optimizing the pipeline.

Automation streamlines evaluating multiple models simultaneously. Data scientists can write scripts to quickly train and test various architectures, algorithms, and hyperparameter combinations in parallel. This bulk benchmarking identifies top-performing models faster by comparing results objectively. Where manual testing may take weeks, automation can evaluate hundreds of models in just hours or days.
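A rough sketch of such a script is shown below. The `evaluate` function is a hypothetical stand-in that returns a made-up deterministic score instead of training a real model, and the parameter grid is invented for illustration. Threads keep the example short; CPU-bound training would more likely run in separate processes or on a cluster.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product
from typing import Dict, List, Tuple

Params = Dict[str, float]


def evaluate(params: Params) -> Tuple[Params, float]:
    """Hypothetical stand-in for training and scoring one model.

    The made-up score peaks at learning_rate=0.1 and depth=4.
    """
    score = 1.0 - abs(params["learning_rate"] - 0.1) - 0.05 * abs(params["depth"] - 4)
    return params, score


grid = {"learning_rate": [0.01, 0.1, 0.5], "depth": [2, 4, 8]}

# Expand the grid into one parameter dictionary per combination.
candidates: List[Params] = [dict(zip(grid, values)) for values in product(*grid.values())]

# Evaluate all candidates concurrently and collect the results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(evaluate, candidates))

best_params, best_score = max(results, key=lambda item: item[1])
print(best_params, round(best_score, 3))
```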

Standardizing Comparisons

The automated process standardizes data preparation and splits, ensuring each model receives identical, unbiased inputs and validation data for testing. Precisely tracking experiments also facilitates consistent comparisons of key metrics like accuracy, precision, recall, F1 score, and AUC-ROC. With automated objective reporting, data scientists can easily sort results to pinpoint the top few models warranting further consideration.
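For reference, the sketch below computes accuracy, precision, recall, and F1 for binary labels and ranks two models on the same validation split. The label vectors and model names are invented for illustration; a production pipeline would normally rely on an established metrics library.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute standard binary-classification metrics, treating 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Both models are scored on the same validation labels.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
predictions = {
    "model_a": [1, 0, 1, 0, 0, 1, 1, 0],
    "model_b": [1, 1, 1, 1, 0, 1, 1, 0],
}

scores = {name: classification_metrics(y_true, pred) for name, pred in predictions.items()}
ranking = sorted(scores, key=lambda name: scores[name]["f1"], reverse=True)
print(ranking)
```

Here the two models have the same accuracy but different F1 scores, which is why tracking several metrics side by side matters when ranking candidates.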

Rather than arbitrarily selecting a single model for additional tuning, the top models emerging from bulk benchmarks then undergo rigorous validation through dedicated testing on held-out data. This confirms their real-world performance before a final selection. The process significantly reduces chances of overfitting or false conclusions from random samples.

By automatically tracking all experiments in a centralized system, data scientists gain valuable insights on methodology. They can easily revisit past results, configurations, parameters, dataset information, and metric scores as needed. Over time, this accumulates institutional knowledge on best practices. It also allows reviewing model performance evolution as data changes occur to identify retraining triggers earlier.
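A centralized record can start out very simple. The sketch below appends each run to a JSON Lines file and reads the history back to find the best run; the file name, record fields, and sample values are assumptions made for illustration, and dedicated experiment-tracking tools offer far more than this.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Dict, List


def log_run(log_path: Path, params: Dict[str, Any], metrics: Dict[str, float]) -> None:
    """Append one experiment record to the log as a single JSON line."""
    with log_path.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps({"params": params, "metrics": metrics}) + "\n")


def load_runs(log_path: Path) -> List[Dict[str, Any]]:
    """Read every recorded experiment back from the log."""
    with log_path.open(encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]


# A temporary directory stands in for shared storage in this sketch.
log_path = Path(tempfile.mkdtemp()) / "experiments.jsonl"
log_run(log_path, {"model": "logistic", "C": 1.0}, {"f1": 0.81})
log_run(log_path, {"model": "tree", "depth": 4}, {"f1": 0.86})

best = max(load_runs(log_path), key=lambda run: run["metrics"]["f1"])
print(best["params"])
```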

Adopting continuous integration/deployment practices

Automating the Rebuilding Process

Implementing a continuous integration and continuous deployment (CI/CD) approach can significantly speed up the machine learning development cycle. With CI/CD, any time a change is made to the code or models in a version control system, it will automatically trigger a rebuild and retest of the model. This rebuilding and retesting process is fully automated so it can occur rapidly without requiring manual intervention from data scientists or engineers.

If the model passes all tests during this automated rebuilding and retesting process, the updated model will then be automatically deployed into the production environment where it can be utilized. This means new training data, code improvements, bug fixes or other changes made to the model do not need to wait until the next formal release cycle to be implemented in production. Updates are seamless and continuously deployed as soon as changes pass automated tests.
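The "passes all tests" condition can be expressed as an explicit gate that a CI job evaluates before promoting a model. The following is a sketch under assumptions: `should_deploy`, the metric names, and the thresholds are hypothetical, and it assumes that higher values are better for every metric.

```python
from typing import Dict


def should_deploy(candidate: Dict[str, float], production: Dict[str, float],
                  minimums: Dict[str, float]) -> bool:
    """Return True only if the candidate clears every floor and matches or beats production."""
    # Absolute quality floors that any deployable model must meet.
    for metric, floor in minimums.items():
        if candidate.get(metric, 0.0) < floor:
            return False
    # No regression allowed on any metric tracked for the current production model.
    return all(candidate.get(metric, 0.0) >= value for metric, value in production.items())


production = {"accuracy": 0.90, "recall": 0.80}
minimums = {"accuracy": 0.85}

improved = {"accuracy": 0.92, "recall": 0.83}
regressed = {"accuracy": 0.93, "recall": 0.70}

print(should_deploy(improved, production, minimums))
print(should_deploy(regressed, production, minimums))
```

The second candidate is rejected despite its higher accuracy because its recall falls below the production model's.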

Real-Time Model Updates

By facilitating a continuous feedback loop where changes trigger immediate redeployment, CI/CD allows companies to continuously refine their models based on the latest data and insights. As customers provide new data through usage or feedback, that information can be incorporated very quickly into improving the model currently in production. Similarly, if data scientists analyze performance and see ways to enhance the model, they can rapidly implement and test changes to deliver faster improvements.

Continuous Improvement

With CI/CD, companies no longer need to wait for periodic release cycles or manual deployments to benefit from enhancements. New training data is used as soon as it is collected rather than requiring a lag until the next release. Problems identified with the existing model can be addressed through rule changes or retraining right away rather than being deferred until later. This continuous cycle of updating and redeploying models means the latest and best-performing versions are always in production.

Equally important is that CI/CD facilitates regular monitoring of models even after they have been deployed. Any anomalies in real-world performance trends compared to test or training data can be caught rapidly. Issues may then be addressed through rule updates, data reviews, or retraining before larger problems emerge. This monitoring closes the loop and helps ensure models continue serving their intended purpose as effectively as possible as conditions change over time.

Monitoring models post-deployment

Tracking Model Performance

Once a machine learning model is deployed, continuous monitoring is crucial to ensure it performs as expected over time. The model deployment stage marks the beginning of an iterative process where the model's ongoing performance is tracked and improvements are made when needed. As new data is fed to the model, monitoring provides visibility into its prediction accuracy and helps detect any decline in performance.

There are several important aspects to consider as part of monitoring models in production. The first is tracking key metrics that measure the model's predictive ability against actual outcomes. For classification models, this involves metrics like accuracy, precision, recall and F1 score calculated on new data. For regression models, metrics like mean absolute error and root mean square error are appropriate. Computing these on a regular basis allows tracking changes in the model's effectiveness over time.
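For the regression case, the two metrics named above can be computed as follows. The sample values are invented for illustration.

```python
import math
from typing import Sequence


def mean_absolute_error(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Average absolute difference between predictions and actual outcomes."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def root_mean_squared_error(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Square root of the average squared difference; penalizes large errors more heavily."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


actual = [3.0, 5.0, 2.0, 7.0]
predicted = [2.0, 5.0, 4.0, 8.0]

mae = mean_absolute_error(actual, predicted)
rmse = root_mean_squared_error(actual, predicted)
print(mae, round(rmse, 4))
```

RMSE is never smaller than MAE on the same data, and the gap widens when a few predictions are far off, so tracking both gives a hint about whether errors are uniform or dominated by outliers.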

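Another important part is monitoring for concept drift, which occurs when the relationship between the input features and the target changes over time, so that patterns learned during training no longer hold. It is often accompanied by data drift, where the statistical properties of the input data themselves change in unforeseen ways. Either can result from changes in the underlying population or processes, and either can reduce a model's ability to generalize. Monitoring for such shifts by comparing current metrics to baselines helps determine if retraining is required.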
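A baseline comparison of this kind can be sketched as a rolling average checked against a tolerance. The class name, baseline, tolerance, and window size below are all assumptions chosen for illustration; appropriate values depend on the metric and on how noisy it is.

```python
from collections import deque
from typing import Deque


class PerformanceMonitor:
    """Flag a sustained drop of a metric below its baseline (higher is assumed better)."""

    def __init__(self, baseline: float, tolerance: float, window: int) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.window = window
        self.recent: Deque[float] = deque(maxlen=window)  # keeps only the latest values

    def record(self, metric_value: float) -> bool:
        """Add a measurement; return True when retraining looks warranted."""
        self.recent.append(metric_value)
        if len(self.recent) < self.window:
            return False  # not enough measurements yet to judge
        average = sum(self.recent) / len(self.recent)
        return average < self.baseline - self.tolerance


monitor = PerformanceMonitor(baseline=0.90, tolerance=0.03, window=3)
weekly_accuracy = [0.91, 0.89, 0.88, 0.85, 0.84]
alerts = [monitor.record(value) for value in weekly_accuracy]
print(alerts)
```

Averaging over a window avoids reacting to a single noisy measurement, at the cost of detecting a genuine decline a little later.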

Diagnosing Performance Issues

Diagnosing the root causes of performance issues is also a crucial part of monitoring. Tools like model explanation techniques can provide insight into factors affecting predictions. Logs detailing predictions and exceptions help debug and fix any bugs or outliers. It is also important to have centralized monitoring dashboards aggregating all these metrics and alerts to get a holistic view of model performance.

When dips in accuracy or other problems are detected, the monitoring process initiates further model improvement. This includes re-examining the data for distribution shifts, retraining the model on newly collected data, optimizing hyperparameters, or even selecting a new model if required. The retrained model then goes through another cycle of deployment and monitoring.

Conclusion

By streamlining every stage of a machine learning pipeline with automation, parallelization, modularization and continuous integration practices, organizations can significantly reduce the time it takes to deploy new machine learning models. This allows data scientists and ML engineers to iterate rapidly on their models and tackle dynamic real-world use cases more effectively. Optimized pipelines are thus a clear competitive advantage for any data-driven organization.