Choosing the Right Deep Learning Model: A Comprehensive Guide

Deep learning models are among the most advanced approaches in artificial intelligence and have driven progress in domains such as image recognition, natural language processing, and autonomous systems. Understanding these models and the algorithms that lie behind them is therefore important for applying them to real-world problems. This article describes and compares several deep learning models in terms of their distinctive features and typical uses.

What are Deep Learning Models?

Deep learning is a branch of machine learning that uses neural networks with several hidden layers to learn from large amounts of data. These models are loosely inspired by the networks of neurons in the human brain and can often learn useful features with little manual guidance. Key characteristics of deep learning models include:

- Multiple Layers: Unlike many other machine learning models, deep learning models are built from several layers of artificial neurons, where each layer extracts and transforms features from the input data. These layers are the input layer, a number of hidden layers, and the output layer.

- Hierarchical Feature Learning: The stacked layers allow deep learning models to represent data at increasing levels of abstraction. Early layers detect simple details such as edges in images, while later layers detect more complicated structures such as objects and faces.

- End-to-end Learning: Deep learning models can learn the mapping from raw input to output directly, without a separate hand-crafted feature extraction step.

- High Performance: Given large data sets, deep learning models can achieve very high accuracy in a wide range of use cases, including image classification, language translation, and speech understanding.

Neural networks are trained with gradient-based optimization: backpropagation computes the gradient of the loss with respect to each weight, and an optimizer such as gradient descent uses those gradients to adjust the weights. This process gradually improves accuracy on the training data and, ideally, helps the model generalize to new, unseen data. In a nutshell, deep learning models have marked a new era in artificial intelligence by providing powerful tools capable of solving many different problems across a variety of domains.
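As a rough illustration of the weight-update loop described above, the following sketch fits a single weight and bias to toy data with plain gradient descent. The data, learning rate, and step count are assumptions chosen for illustration. The gradients are written out by hand here, whereas deep learning frameworks obtain them through backpropagation.

```python
# Toy data generated from y = 2x + 1 (illustrative assumption)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = 0.0, 0.0          # parameters to learn
learning_rate = 0.05     # hypothetical step size

for step in range(2000):
    n = len(xs)
    # Gradients of the mean squared error with respect to w and b
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    # Move each parameter a small step against its gradient
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))
```

With these settings the learned values should approach the slope 2 and intercept 1 used to generate the data.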

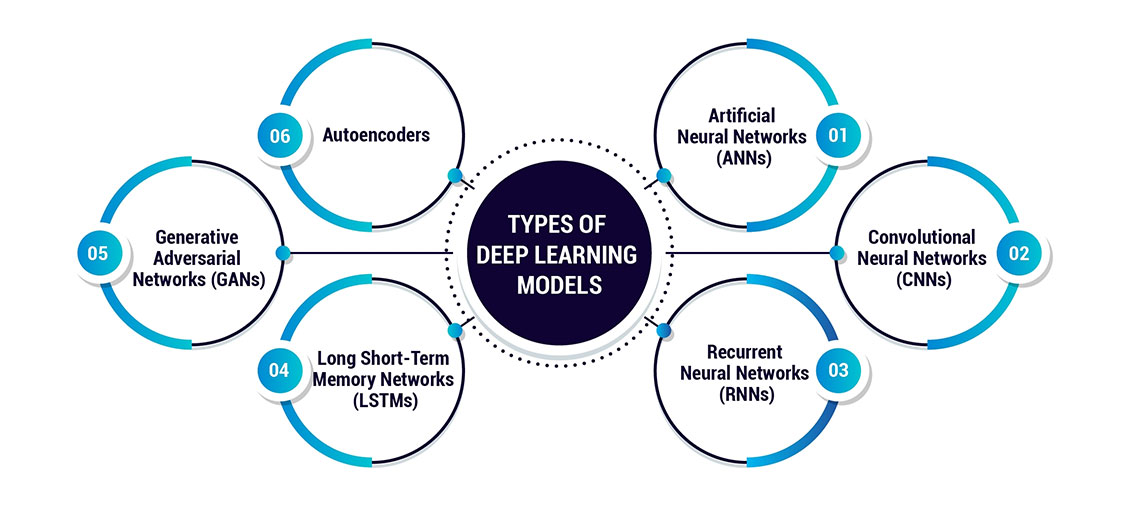

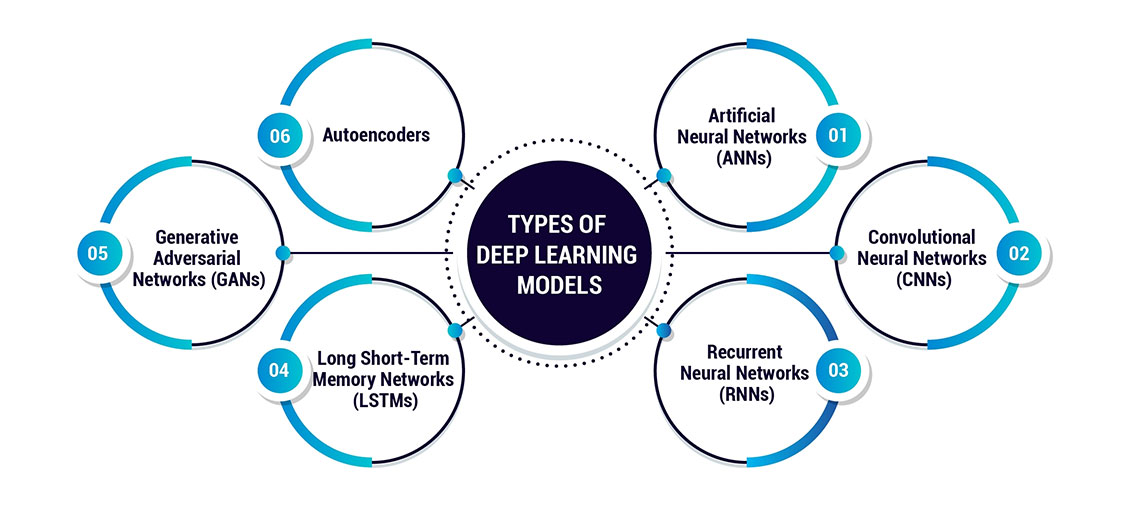

Types of Deep Learning Models

Deep learning models have dramatically advanced artificial intelligence because they can process data and make decisions based on learned patterns. Each type of model was created with certain objectives in mind and suits different uses. Here’s a detailed overview of some prominent deep learning models:

1. Artificial Neural Networks (ANNs)

Most deep learning models build on the artificial neural network (ANN), which is the usual starting point for studying deep learning. An ANN is composed of layers of interconnected neurons; each connection has a weight that is adjusted during training. ANNs can be applied to many types of data, including numerical data, images, and text.

- Input Layer: Receives raw data.

- Hidden Layers: Perform computations using activation functions.

- Output Layer: Produces the final prediction or output.

Example Code Snippet:

    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense

    input_dim = 20  # number of input features (placeholder value)

    # Define the ANN model: two hidden layers and a sigmoid output
    # for binary classification
    model = Sequential([
        Dense(64, activation='relu', input_shape=(input_dim,)),
        Dense(32, activation='relu'),
        Dense(1, activation='sigmoid')
    ])
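To make the role of each layer concrete, here is a framework-free sketch of what a stack of fully connected layers computes at prediction time. The tiny weights and the helper name `dense` are made up for illustration. Keras learns its weights during training and uses optimized tensor operations rather than Python lists.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(w . x + b) for each neuron."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Hypothetical weights for a 2-input, 2-hidden-unit, 1-output network
hidden_w = [[0.5, -0.2], [0.3, 0.8]]
hidden_b = [0.0, 0.1]
output_w = [[1.0, -1.0]]
output_b = [0.0]

x = [1.0, 2.0]                                        # input layer: raw features
hidden = dense(x, hidden_w, hidden_b, relu)           # hidden layer
output = dense(hidden, output_w, output_b, sigmoid)   # output layer
print(hidden, output)
```

The sigmoid on the last layer squashes the result into the range 0 to 1, which is why it is a common choice for binary classification outputs.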

2. Convolutional Neural Networks (CNNs)

CNNs are well suited to grid-like data such as images. They use convolutional layers to learn spatial hierarchies of features adaptively. Key components include:

- Convolutional Layers: Apply convolutional operations to the input.

- Pooling Layers: Reduce dimensionality by downsampling.

- Fully Connected Layers: Combine features to make predictions.

Example Code Snippet:

    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

    # Placeholder input dimensions and class count; adjust to your dataset
    height, width, channels = 64, 64, 3
    num_classes = 10

    # Define the CNN model
    model = Sequential([
        Conv2D(32, (3, 3), activation='relu', input_shape=(height, width, channels)),
        MaxPooling2D((2, 2)),
        Flatten(),
        Dense(64, activation='relu'),
        Dense(num_classes, activation='softmax')
    ])
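The convolution and pooling operations listed above can be sketched in plain Python. This is a simplified illustration that assumes a single-channel square image, a square kernel, stride 1, and no padding. The edge-detecting kernel is hand-picked here, whereas a CNN learns its kernels during training.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image with stride 1 and no padding."""
    k = len(kernel)
    out_size = len(image) - k + 1
    return [
        [
            sum(
                image[row + i][col + j] * kernel[i][j]
                for i in range(k)
                for j in range(k)
            )
            for col in range(out_size)
        ]
        for row in range(out_size)
    ]

def max_pool_2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 window."""
    size = len(feature_map) // 2
    return [
        [
            max(
                feature_map[2 * r][2 * c], feature_map[2 * r][2 * c + 1],
                feature_map[2 * r + 1][2 * c], feature_map[2 * r + 1][2 * c + 1],
            )
            for c in range(size)
        ]
        for r in range(size)
    ]

# A 6x6 toy image with a vertical edge between columns 2 and 3
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hypothetical 3x3 kernel that responds to left-to-right brightness increases
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

feature_map = conv2d_valid(image, kernel)   # 6x6 -> 4x4
pooled = max_pool_2x2(feature_map)          # 4x4 -> 2x2
print(feature_map[0], pooled)
```

The feature map is largest where the window straddles the edge, and pooling keeps that response while halving each spatial dimension.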

3. Recurrent Neural Networks (RNNs)

RNNs are neural networks designed for sequence data such as time series or natural language. Their recurrent connections allow information to be carried across several time steps. RNNs are well suited to language generation and analysis and to time series prediction.
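The recurrence that lets an RNN carry information forward can be sketched as follows. To keep the arithmetic visible, this assumes a scalar input and a scalar hidden state with made-up weights. Practical RNN layers use weight matrices and vector-valued states.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent update: h_t = tanh(w_x * x_t + w_h * h_prev + b)."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

# Hypothetical scalar weights; real RNNs use weight matrices and vector states
w_x, w_h, b = 0.5, 0.9, 0.0

sequence = [1.0, 0.0, 0.0, 0.0]
h = 0.0                      # initial hidden state
states = []
for x_t in sequence:
    h = rnn_step(x_t, h, w_x, w_h, b)   # the same weights are reused at every step
    states.append(h)

print([round(s, 3) for s in states])
```

Only the first input is non-zero, yet every later state is still positive: the hidden state carries a fading trace of that input through the sequence.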

4. Long Short-Term Memory Networks (LSTMs)

LSTMs help to overcome the vanishing gradient problem experienced with standard RNNs. They introduce memory cells that enable the network to learn long-term dependencies. Key elements include:

- Input Gate: Controls how much new information is written to the memory cell.

- Forget Gate: Decides what information to discard.

- Output Gate: Determines what to output.

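Example Code Snippet:

    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import LSTM, Dense

    # Placeholder sequence length and number of features per time step
    timesteps, features = 10, 1

    # Define the LSTM model: the first LSTM returns the full sequence
    # so that the second LSTM can consume it
    model = Sequential([
        LSTM(50, return_sequences=True, input_shape=(timesteps, features)),
        LSTM(50),
        Dense(1)
    ])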
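The three gates described above can be traced in a single LSTM update. The sketch below follows the standard LSTM equations but, as a simplifying assumption, uses scalar states and hand-picked parameters. The `params` dictionary and its layout are hypothetical and are not how Keras stores its weights.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM update with scalar state; p maps gate names to (w_x, w_h, b)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x_t + w_h * h_prev + b

    i = sigmoid(pre("input"))        # input gate: how much new content to write
    f = sigmoid(pre("forget"))       # forget gate: how much old memory to keep
    o = sigmoid(pre("output"))       # output gate: how much memory to expose
    g = math.tanh(pre("candidate"))  # candidate content for the memory cell
    c_t = f * c_prev + i * g         # updated memory cell
    h_t = o * math.tanh(c_t)         # new hidden state
    return h_t, c_t

# Hypothetical parameters; the large forget-gate bias keeps memory almost intact
params = {
    "input": (1.0, 0.0, 0.0),
    "forget": (0.0, 0.0, 5.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 0.0
for x_t in [1.0, 0.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x_t, h, c, params)
print(round(h, 3), round(c, 3))
```

Because the forget gate stays close to 1, the memory cell retains most of what was written at the first step, which is the mechanism that helps LSTMs hold on to long-term dependencies.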

5. Generative Adversarial Networks (GANs)

GANs consist of two main parts: a generator and a discriminator. The generator produces fake data, and the discriminator tries to classify data as real or fake. Training pits the two networks against each other, so each is pushed to improve as the other gets better.
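The competition between the two networks shows up in their loss functions. The sketch below uses made-up discriminator scores and the commonly used non-saturating generator loss. It illustrates the objectives only and is not a training loop.

```python
import math

def binary_cross_entropy(labels, predictions):
    """Average binary cross-entropy between 0/1 labels and probabilities."""
    eps = 1e-7  # avoids log(0)
    total = 0.0
    for y, p in zip(labels, predictions):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

# Hypothetical discriminator outputs (probability that a sample is real)
d_on_real = [0.9, 0.8]   # scores for real samples
d_on_fake = [0.3, 0.1]   # scores for generated samples

# Discriminator objective: label real samples 1 and generated samples 0
d_loss = binary_cross_entropy([1, 1, 0, 0], d_on_real + d_on_fake)

# Generator objective (non-saturating form): wants fakes to be scored as real
g_loss = binary_cross_entropy([1, 1], d_on_fake)

print(round(d_loss, 3), round(g_loss, 3))
```

In this snapshot the discriminator is winning: its loss is low while the generator's is high. A better generator would raise the scores on fake samples, lowering its own loss and raising the discriminator's.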

6. Autoencoders

Autoencoders are used in unsupervised learning applications such as feature learning and dimensionality reduction. They consist of an encoder that compresses the data and a decoder that reconstructs it.

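Example Code Snippet:

    from tensorflow.keras.models import Model
    from tensorflow.keras.layers import Input, Dense

    input_dim = 784  # number of input features (placeholder value)

    # Define the Autoencoder model
    input_layer = Input(shape=(input_dim,))
    encoded = Dense(64, activation='relu')(input_layer)        # compressed representation
    decoded = Dense(input_dim, activation='sigmoid')(encoded)  # reconstruction

    autoencoder = Model(input_layer, decoded)  # full model: input -> reconstruction
    encoder = Model(input_layer, encoded)      # encoder only: input -> compressed code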
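The compress-then-reconstruct idea can be shown without a framework. The sketch below is a deliberately tiny linear autoencoder with fixed, hand-picked weights (an assumption for illustration, since a real autoencoder learns its weights by minimizing reconstruction error). It compresses 2-D points to a single number and measures how well they are rebuilt.

```python
import math

# Hypothetical fixed weights: a unit vector along the line y = x
direction = [1 / math.sqrt(2), 1 / math.sqrt(2)]

def encode(point):
    """Compress a 2-D point into a single number (the code)."""
    return point[0] * direction[0] + point[1] * direction[1]

def decode(code):
    """Reconstruct a 2-D point from its 1-D code."""
    return [code * direction[0], code * direction[1]]

def reconstruction_error(point):
    """Mean squared difference between a point and its reconstruction."""
    rebuilt = decode(encode(point))
    return sum((a - b) ** 2 for a, b in zip(point, rebuilt)) / len(point)

on_line = [2.0, 2.0]     # fits the structure the code can represent
off_line = [2.0, -2.0]   # does not fit it
print(reconstruction_error(on_line), reconstruction_error(off_line))
```

Points that match the structure captured by the code are rebuilt almost perfectly, while others incur a large error. This is the property that makes reconstruction error useful for dimensionality reduction.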

Every deep learning model has its own purpose, advantages, and disadvantages. Knowing these models helps in identifying the most suitable one for a given problem.

Detailed Comparison of Deep Learning Models

When comparing deep learning models, it is important to consider several factors to judge their suitability and limitations. The comparison below is based on accuracy, training time, complexity, interpretability, and scalability.

- Accuracy: Accuracy is usually at the top of the list of factors that determine the suitability of a deep learning model. CNNs are widely used in image recognition because they can model spatial hierarchies. RNNs, particularly LSTMs, are better suited to sequential data such as time series or natural language. Generative Adversarial Networks (GANs) can generate highly realistic data.

- Training Time: Training time varies considerably with the complexity of the model. Artificial Neural Networks (ANNs) are comparatively fast to train because of their relatively simple architecture. CNNs, although more complex, can be trained efficiently on today’s GPUs. RNNs and LSTMs are slower to train because they process data sequentially, so the gradient must be back-propagated through time. GANs are time-consuming to train because two networks must be trained at the same time.

- Complexity: Another important factor is model complexity. Feed-forward ANNs with fully connected layers are the simplest. CNNs are more complicated because their convolutional and pooling layers demand more careful architecture design. RNNs and LSTMs add further complexity because they work with sequences and must manage an internal memory state. GANs are often the most complicated to work with because they are composed of two networks, the generator and the discriminator.

- Interpretability: Interpretability, the ability to explain how a model arrives at its output, is crucial in many domains. ANNs are fairly interpretable when small, since their layer connections are simple. CNNs are more complex, but they can be explained to some extent using activation maps. RNNs and LSTMs are harder to interpret because of their internal state. GANs are typically the hardest, because of their adversarial training and the difficulty of understanding how an output is produced.

- Scalability: Scalability describes how well a model handles growing volumes of data and computational power. CNNs and ANNs generally improve with more data and bigger models. RNNs and LSTMs become harder to train as sequence length increases, with additional issues of vanishing and exploding gradients. GANs can be scaled up, but training becomes harder to stabilize as the size of the model increases.
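The vanishing and exploding gradient problem mentioned for long sequences comes down to repeated multiplication. As a simplified model (an assumption, since real gradients involve matrices that change from step to step), suppose back-propagation through time scales the gradient by the same factor at every time step:

```python
def gradient_scale(per_step_factor, sequence_length):
    """Product of the same factor applied once per time step."""
    return per_step_factor ** sequence_length

for length in (10, 50, 100):
    shrinking = gradient_scale(0.9, length)   # factor slightly below 1
    growing = gradient_scale(1.1, length)     # factor slightly above 1
    print(length, f"{shrinking:.2e}", f"{growing:.2e}")
```

Even factors close to 1 drive the gradient toward zero or toward very large values over 100 steps, which is why long sequences are hard for plain RNNs.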

The choice of the deep learning model to use in a particular task is influenced by factors such as the type of data, the level of accuracy desired, the amount of computational power available, and the need for model interpretability. Knowledge of these aspects enables one to make a good decision to achieve the best results from the model.
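One rough way to compare the computational demands of different layer types is to count trainable parameters. The helper functions below are illustrative names rather than library APIs. They use the standard parameter-count formulas for fully connected, 2-D convolutional, and LSTM layers with biases.

```python
def dense_params(inputs, units):
    """Weights plus one bias per unit in a fully connected layer."""
    return inputs * units + units

def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Each filter has kernel_h * kernel_w * in_channels weights plus a bias."""
    return kernel_h * kernel_w * in_channels * filters + filters

def lstm_params(inputs, units):
    """Four gate-like blocks, each with input weights, recurrent weights, and biases."""
    return 4 * (inputs * units + units * units + units)

print(dense_params(64, 32))          # 2080
print(conv2d_params(3, 3, 3, 32))    # 896
print(lstm_params(10, 50))           # 12200
```

Parameter count is only a proxy: a convolutional layer has few parameters but applies them at every image position, so its compute cost can still be high.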

Code Snippets and Practical Examples

Deep learning models can be built and evaluated with a variety of frameworks and libraries. The following examples implement several deep learning models using TensorFlow/Keras.

Example 1: Building a Simple CNN for Image Classification

A convolutional neural network (CNN) is one of the most reliable algorithms for image classification. Here's a basic implementation of a CNN using TensorFlow/Keras:

Code Snippet:

    from tensorflow.keras import layers, models

    # Define the CNN model
    model = models.Sequential([
        layers.Conv2D(32, (3, 3), activation='relu', input_shape=(64, 64, 3)),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(64, (3, 3), activation='relu'),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(64, (3, 3), activation='relu'),
        layers.Flatten(),
        layers.Dense(64, activation='relu'),
        layers.Dense(10, activation='softmax')  # Assuming 10 classes
    ])

    # Compile the model
    model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

    # Summary of the model
    model.summary()

This code defines a simple CNN with three convolutional layers, the first two each followed by a max-pooling layer, and then fully connected dense layers. The model is compiled with the Adam optimizer and sparse categorical cross-entropy loss, which is appropriate for multi-class classification when the labels are integer class indices.
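The shapes that `model.summary()` reports for this CNN can be checked by hand. The sketch below assumes the Keras defaults used in the example: stride-1 convolutions without padding and non-overlapping 2x2 pooling. The helper names are illustrative.

```python
def conv_out(size, kernel=3):
    """Output size of a stride-1 convolution without padding."""
    return size - kernel + 1

def pool_out(size, pool=2):
    """Output size of non-overlapping pooling (any remainder is dropped)."""
    return size // pool

size = 64
size = conv_out(size)   # 62
size = pool_out(size)   # 31
size = conv_out(size)   # 29
size = pool_out(size)   # 14
size = conv_out(size)   # 12

flattened = size * size * 64   # 64 filters in the last convolutional layer
print(size, flattened)         # 12 9216
```

The Flatten layer therefore passes 9,216 values to the first dense layer, which is where most of this model's parameters sit.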

Example 2: Implementing an RNN for Text Generation

Recurrent Neural Networks (RNNs) are designed for sequence prediction. Here’s an example of using an RNN for text generation:

Code Snippet:

    from tensorflow.keras.preprocessing.text import Tokenizer
    from tensorflow.keras.preprocessing.sequence import pad_sequences
    from tensorflow.keras import layers, models

    # Sample text data
    texts = ["hello world", "machine learning is fun", "deep learning is exciting"]

    # Tokenize the text
    tokenizer = Tokenizer()
    tokenizer.fit_on_texts(texts)
    sequences = tokenizer.texts_to_sequences(texts)
    X = pad_sequences(sequences)

    # Define the RNN model
    model = models.Sequential([
        layers.Embedding(input_dim=len(tokenizer.word_index)+1, output_dim=50, input_length=X.shape[1]),
        layers.SimpleRNN(64, return_sequences=True),
        layers.Dense(len(tokenizer.word_index)+1, activation='softmax')
    ])

    # Compile the model
    model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

    # Summary of the model
    model.summary()

In this example, we apply an RNN to text sequences. The ‘Embedding’ layer converts word indices into dense vectors, followed by a ‘SimpleRNN’ layer that captures temporal dependencies and a ‘Dense’ softmax layer that predicts a word at every time step. The model is compiled with the Adam optimizer and sparse categorical cross-entropy loss.
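The example defines the model but no training targets. One common way to set up text generation, sketched here under the assumption of next-word prediction, is to use each sequence shifted by one position as its own target. The token ids below are made up for illustration.

```python
# Hypothetical integer-encoded sentence, as a tokenizer might produce
token_ids = [4, 1, 2, 5]

# Inputs are all tokens except the last; targets are the same tokens shifted by one
inputs = token_ids[:-1]
targets = token_ids[1:]

for x, y in zip(inputs, targets):
    print(f"given token {x}, predict token {y}")
```

With `return_sequences=True`, the model emits one prediction per time step, so each position in `inputs` lines up with the corresponding position in `targets`.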

Example 3: Training a GAN for Image Generation

Generative Adversarial Networks (GANs) consist of a generator and a discriminator. Here's a basic setup for a GAN:

Code Snippet:

    from tensorflow.keras import layers, models

    # Define the generator: maps a 100-dimensional noise vector to a 28x28 image
    def build_generator():
        model = models.Sequential([
            layers.Dense(128, activation='relu', input_dim=100),
            layers.BatchNormalization(),
            layers.Dense(784, activation='sigmoid'),
            layers.Reshape((28, 28, 1))
        ])
        return model

    # Define the discriminator: maps an image to the probability that it is real
    def build_discriminator():
        model = models.Sequential([
            layers.Flatten(input_shape=(28, 28, 1)),
            layers.Dense(128, activation='relu'),
            layers.Dense(1, activation='sigmoid')
        ])
        return model

    # Compile the GAN
    generator = build_generator()
    discriminator = build_discriminator()
    discriminator.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

    # Freeze the discriminator inside the combined model so that
    # training the GAN updates only the generator
    discriminator.trainable = False

    gan_input = layers.Input(shape=(100,))
    x = generator(gan_input)
    gan_output = discriminator(x)
    gan = models.Model(gan_input, gan_output)
    gan.compile(optimizer='adam', loss='binary_crossentropy')

    # Summary of the models
    generator.summary()
    discriminator.summary()
    gan.summary()

In this code, we create a simple GAN with a generator that produces images from noise and a discriminator that tries to distinguish real images from generated ones. Both the discriminator and the combined model are compiled with the Adam optimizer and binary cross-entropy loss; the training loop itself is not shown.

These code snippets give the reader a starting point for working with and exploring various deep-learning models. Using these examples and adjusting parameters will further enhance the understanding of deep learning algorithms and their real-life implementations.

Conclusion

Deep learning models are powerful, but applying them effectively requires learning how to work with them. Understanding the subtleties of the various deep learning algorithms enables practitioners to choose and fine-tune models for specific contexts, thereby fostering technological growth and innovation. Identifying new trends and overcoming current limitations will be critical to the effective use of deep learning models in real-world applications.