Efficient Fine-Tuning of Large Language Models with LoRA

Large language models have become highly valuable in artificial intelligence applications because they expand what is possible in NLP. However, training them, or even fully fine-tuning them, remains demanding in terms of computational resources and memory. Low-Rank Adaptation (LoRA) addresses this by greatly reducing the number of trainable parameters. This makes fine-tuning more portable, less costly, and faster, which suits domain-specific large language models, typically without a significant loss of accuracy.

Fundamentals of Fine-Tuning Large Language Models

Fine-tuning adapts a pre-trained LLM to specific tasks or domains while largely preserving its general knowledge. Pre-training, by contrast, trains a new model from scratch on very large datasets. Fine-tuning instead continues training an existing model on a much smaller, task-specific dataset, adjusting its behavior for the projects it will be used in.

Challenges of Traditional Fine-Tuning

- Compute Costs: Full fine-tuning updates every parameter and typically requires GPUs or TPUs, which drives up operational costs.

- Memory Constraints: Full fine-tuning must keep the weights, gradients, and optimizer states for every parameter in video RAM (VRAM), which makes it challenging to run on hardware with limited memory.

- Overfitting Risks: Training on limited datasets without proper regularization can lead to models that memorize rather than generalize.
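To make the memory constraint concrete, the back-of-envelope sketch below estimates training memory from parameter counts. It assumes fp32 weights and gradients (4 bytes each) and an Adam-style optimizer that keeps two fp32 states per trainable parameter (8 bytes), and it ignores activations, so real usage will be higher. The 7-billion-parameter model and the 4-million-parameter adapter are hypothetical sizes chosen only for illustration.

```python
def training_memory_gb(total_params, trainable_params,
                       bytes_weight=4, bytes_grad=4, bytes_optim=8):
    """Rough training-memory estimate in GB (1 GB = 1e9 bytes), excluding activations.

    Every parameter must be stored; only trainable parameters also need
    a gradient and optimizer states (e.g. Adam's two moment estimates).
    """
    weights = total_params * bytes_weight
    grads_and_optim = trainable_params * (bytes_grad + bytes_optim)
    return (weights + grads_and_optim) / 1e9


base_params = 7_000_000_000   # hypothetical 7B-parameter model
adapter_params = 4_000_000    # hypothetical LoRA adapter size

# Full fine-tuning: every parameter is trainable
full = training_memory_gb(base_params, base_params)
# LoRA: base weights are frozen, only the adapter is trained
lora = training_memory_gb(base_params + adapter_params, adapter_params)

print(f"full fine-tuning: {full:.1f} GB, LoRA: {lora:.1f} GB")
```

Under these assumptions, most of the saving comes from not storing gradients and optimizer states for the frozen weights.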

The Role of Parameter-Efficient Fine-Tuning (PEFT)

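Parameter-efficient fine-tuning (PEFT) methods were developed to address these issues by achieving comparable performance while training far fewer parameters. PEFT techniques update only a small, selected set of weights (or a small number of newly added ones) and keep the rest of the model frozen, which makes training faster and cheaper than full fine-tuning.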

What is LoRA for LLMs?

LoRA is a modern approach to fine-tuning large language models more efficiently by constraining the update to each adapted weight matrix to be low-rank. Full fine-tuning can be time-consuming and computationally intensive because it updates every weight in the model. LoRA instead freezes the pre-trained weights and trains only a small set of additional parameters, keeping the trainable footprint small while maintaining high performance.

Key Aspects of LoRA for LLMs:

- Efficiency: Instead of updating the original weight matrices directly, LoRA learns a low-rank update to them, which greatly reduces the number of parameters that need to be trained.

- Cost-effective: Because far fewer parameters are trained, LoRA reduces training time and hardware requirements, which makes it well suited to very large models.

- Flexible Integration: LoRA can be incorporated into many model architectures without significant structural changes, which is another strength of the method.

How LoRA Works: The Technical Breakdown

LoRA fine-tunes large language models with minimal modification of their parameters. Unlike full fine-tuning, which updates every weight, LoRA keeps the original weights frozen and adds only trainable low-rank matrices. This dramatically reduces the number of parameters learned during fine-tuning, making the process memory- and compute-efficient.
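The standard LoRA formulation can be written as follows, where W_0 is a frozen pre-trained weight matrix, B and A are the trainable low-rank factors, r is the rank, and α is a scaling constant. B is usually initialized to zero, so training starts from the unchanged pre-trained model. The second line works through the parameter count for one illustrative 4096 × 4096 matrix with r = 8.

```latex
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad W_0 \in \mathbb{R}^{d \times k},\; B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)

\text{trainable parameters: } r(d + k) \text{ instead of } dk;
\quad d = k = 4096,\ r = 8:\ 8 \cdot 8192 = 65{,}536 \text{ vs. } 4096^2 = 16{,}777{,}216 \ \left(\tfrac{1}{256} \approx 0.39\%\right)
```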

Fundamental Principles of LoRA:

- Low-rank approximation: Rather than factorizing the pre-trained weights themselves, LoRA represents the change to a weight matrix as the product of two much smaller matrices whose inner dimension, the rank r, is far smaller than the matrix dimensions. This keeps the number of added parameters small compared with updating the full matrix.

- Efficient updates: Gradients and optimizer states are needed only for the small low-rank matrices, which lowers training cost, and because the base weights stay unchanged, the same model can still be adapted to other tasks.

- Minimal impact on original model: LoRA augments the frozen base weights with small low-rank matrices instead of adjusting them, which helps the model retain its general capabilities and reduces the risk of overfitting.
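The principles above can be illustrated with a small NumPy sketch. The matrix sizes, rank, and alpha are arbitrary illustrative values, and the random "trained" B simply stands in for the result of real training. It shows that the adapter starts as a no-op, that the update has rank at most r, and that the update can be folded into the frozen weight afterwards.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, r, alpha = 64, 128, 4, 8   # illustrative sizes only
scaling = alpha / r

W0 = rng.standard_normal((d_out, d_in))     # frozen pre-trained weight
A = rng.standard_normal((r, d_in)) * 0.01   # trainable, small random init
B = np.zeros((d_out, r))                    # trainable, zero init


def lora_forward(x, W0, A, B, scaling):
    """h = W0 x + scaling * B (A x), without forming the full d_out x d_in update."""
    return W0 @ x + scaling * (B @ (A @ x))


x = rng.standard_normal(d_in)

# With B = 0 the adapter contributes nothing: output equals the base model's
h_init = lora_forward(x, W0, A, B, scaling)

# Stand-in for trained values of B (in practice these come from fine-tuning)
B = rng.standard_normal((d_out, r)) * 0.01
delta_W = scaling * (B @ A)                 # the low-rank weight update
h_adapted = lora_forward(x, W0, A, B, scaling)

# After training, the update can be merged into the weight for inference
W_merged = W0 + delta_W

print("rank of update:", np.linalg.matrix_rank(delta_W))
print("adapted output matches merged weight:", np.allclose(h_adapted, W_merged @ x))
```

Merging is optional; it is mainly useful when inference should cost exactly the same as the base model.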

Implementing LoRA for Large Language Models

Implementing LoRA for an LLM involves a few steps that make fine-tuning a pre-trained model straightforward while keeping computational overhead low:

- Environment Setup: Create a Python environment with a deep learning framework and the libraries needed for training (for example PyTorch, Hugging Face transformers, and PEFT). Check that your system meets the requirements, in particular a GPU with enough memory for the base model.

- Selecting a Base Model: Choose a pre-trained large language model that suits your task, typically a transformer-based architecture. The base model is the starting point when applying LoRA.

- LoRA Integration: Attach trainable low-rank matrices to selected layers of the model, most often the attention projections, while keeping the original weights frozen. This changes the model's behavior without retraining all of its parameters, and because the added matrices are small, they can be learned quickly.

Code Snippet: Implementing LoRA in Training

Before applying LoRA, we need to load a pre-trained model and introduce low-rank adaptation to specific layers:

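import torch
import torch.nn as nn
from transformers import AutoModel

# Load a pre-trained model ('pretrained-model-name' is a placeholder; the layer
# path used below assumes a BERT-style encoder)
model = AutoModel.from_pretrained('pretrained-model-name')

# Freeze all pre-trained weights so that only the LoRA matrices are trained
for param in model.parameters():
    param.requires_grad = False

class LoRALinear(nn.Module):
    """Wraps a frozen nn.Linear and adds a trainable low-rank update B @ A."""
    def __init__(self, base: nn.Linear, r: int = 8, alpha: int = 16):
        super().__init__()
        self.base = base
        self.A = nn.Parameter(torch.randn(r, base.in_features) * 0.01)  # r x in
        self.B = nn.Parameter(torch.zeros(base.out_features, r))        # out x r, zero init
        self.scaling = alpha / r

    def forward(self, x):
        # Original output plus the scaled low-rank correction (x A^T B^T)
        return self.base(x) + (x @ self.A.T @ self.B.T) * self.scaling

# Wrap the query projection of the first self-attention layer with LoRA
attention = model.encoder.layer[0].attention.self
attention.query = LoRALinear(attention.query, r=8)

In the above snippet, a pre-trained model is loaded and its weights are frozen. The query projection of the first self-attention layer is then wrapped so that a scaled low-rank correction B·A is added to its output, and only the small matrices A and B are trainable. Because B starts at zero, the wrapped model initially behaves exactly like the original, and fine-tuning updates only a small fraction of the parameters.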

- Fine-tuning: Once LoRA has been integrated, fine-tune the model on your dataset. Because only the low-rank matrices are updated, fine-tuning is much faster and uses less memory than full fine-tuning.
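The claim that only the low-rank matrices are tuned can be checked in a toy setting. The NumPy sketch below assumes a single linear layer whose frozen weight W0 has to be shifted by a hidden rank-2 change (synthetic data, not a real task). Plain gradient descent updates only A and B, and W0 is never modified. Sizes, learning rate, and step count are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(1)
d_out, d_in, r, n = 8, 16, 2, 256
scaling = 1.0                                # alpha / r with alpha = r

W0 = rng.standard_normal((d_out, d_in))      # frozen pre-trained weight
W0_before = W0.copy()
# Synthetic "task": targets come from W0 plus a hidden rank-2 shift
delta_true = 0.1 * rng.standard_normal((d_out, r)) @ rng.standard_normal((r, d_in))
X = rng.standard_normal((d_in, n))
Y = (W0 + delta_true) @ X

A = rng.standard_normal((r, d_in)) / np.sqrt(d_in)   # trainable
B = np.zeros((d_out, r))                              # trainable, zero init


def loss_and_grads(A, B):
    """Mean squared error and its gradients with respect to A and B only."""
    E = (W0 + scaling * B @ A) @ X - Y               # residuals
    loss = np.mean(E ** 2)
    G = 2.0 * E @ X.T / E.size                       # dLoss / dW_effective
    return loss, scaling * B.T @ G, scaling * G @ A.T  # (loss, dA, dB)


lr = 0.2
initial_loss, _, _ = loss_and_grads(A, B)
for _ in range(2000):
    _, dA, dB = loss_and_grads(A, B)
    A -= lr * dA                                     # only the adapter is updated
    B -= lr * dB
final_loss, _, _ = loss_and_grads(A, B)

print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
print("W0 unchanged:", np.array_equal(W0, W0_before))
```

In a real model the same idea is implemented by marking the base weights as non-trainable, so the optimizer never receives them.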

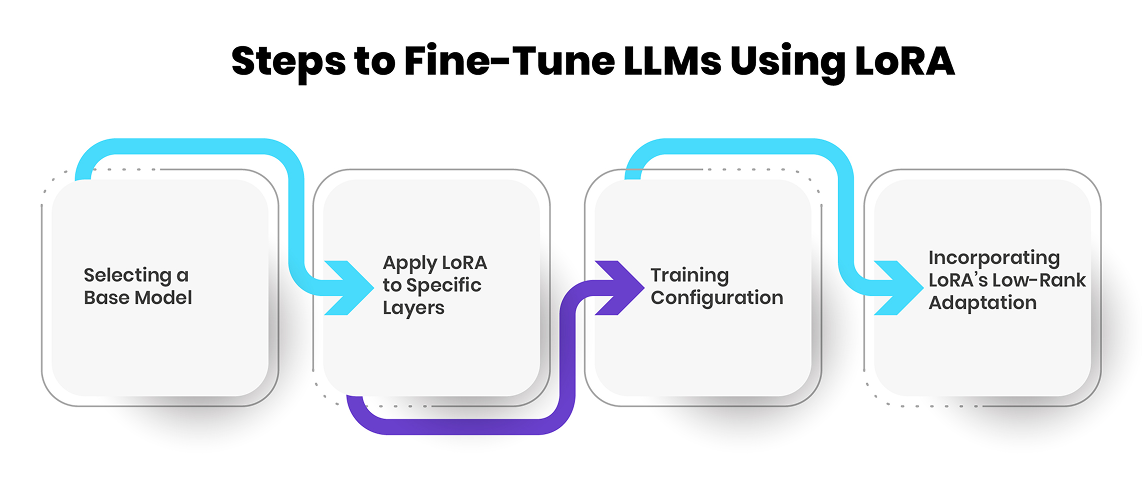

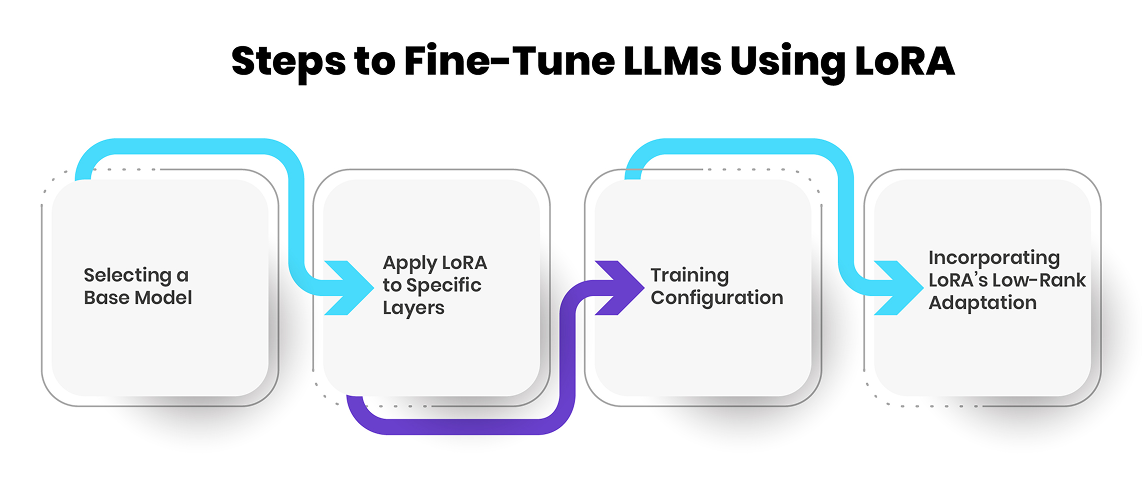

Practical Steps to Fine-Tune LLMs Using LoRA

Fine-tuning a pre-trained model with LoRA is a practical option when resources cannot support full fine-tuning, let alone training from scratch. The following steps outline how to apply LoRA effectively:

First, select a pre-trained large language model suited to your task. This can be a general-purpose model or one already specialized for a particular domain.

Apply LoRA to Specific Layers

Instead of fine-tuning the whole model, LoRA targets particular layers, often the attention layers, and adds learnable low-rank matrices to them. This greatly reduces the number of parameters that need to be fine-tuned.

It is important to set the learning rate, batch size, and number of epochs for training, along with LoRA's own rank r and scaling factor alpha. Because LoRA trains so few parameters, each training run needs fewer resources while still achieving good performance.
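The training hyperparameters, together with LoRA's rank r and scaling factor alpha, can be collected in one place. The sketch below is a hypothetical configuration object: the field names and default values are illustrative, not recommendations from this article or any specific library. It derives the scaling factor alpha / r and the total number of optimizer steps from the dataset size, batch size, and epoch count.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LoRATrainingConfig:
    """Hypothetical container for LoRA fine-tuning hyperparameters."""
    learning_rate: float = 2e-4
    batch_size: int = 16
    num_epochs: int = 3
    rank: int = 8          # r: inner dimension of the low-rank matrices
    alpha: int = 16        # the low-rank update is multiplied by alpha / r
    dropout: float = 0.05  # dropout rate for the adapter branch

    def __post_init__(self):
        if self.rank <= 0 or self.batch_size <= 0 or self.num_epochs <= 0:
            raise ValueError("rank, batch_size and num_epochs must be positive")

    @property
    def scaling(self) -> float:
        return self.alpha / self.rank

    def total_steps(self, num_examples: int) -> int:
        """Optimizer steps, counting the last (possibly smaller) batch of each epoch."""
        return math.ceil(num_examples / self.batch_size) * self.num_epochs


config = LoRATrainingConfig()
print(config.scaling, config.total_steps(10_000))
```

Keeping alpha fixed while changing r changes the effective scaling, which is one reason the two are usually tuned together.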

Incorporating LoRA's Low-Rank Adaptation

- LoRA introduces small low-rank matrices into selected layers of the model, typically the attention projections, although it can also be applied to other layers such as feed-forward or embedding layers.

- These matrices adapt the model to specific tasks without changing its architecture or its pre-trained weights.
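Choosing which layers receive adapters usually comes down to matching module names. The sketch below is a simplified, hypothetical version of that idea: it selects modules whose last name component matches one of the given targets. The module names are made up in a BERT-like style, and real libraries such as PEFT have their own, more detailed matching rules.

```python
def select_target_modules(module_names, target_suffixes):
    """Return the module names whose last dotted component is in target_suffixes."""
    targets = set(target_suffixes)
    return [name for name in module_names if name.rsplit(".", 1)[-1] in targets]


# Hypothetical module names for a two-layer BERT-style encoder
names = []
for i in range(2):
    prefix = f"encoder.layer.{i}"
    names += [
        f"{prefix}.attention.self.query",
        f"{prefix}.attention.self.key",
        f"{prefix}.attention.self.value",
        f"{prefix}.attention.output.dense",
        f"{prefix}.intermediate.dense",
        f"{prefix}.output.dense",
    ]
names.append("embeddings.word_embeddings")

selected = select_target_modules(names, ["query", "value"])
print(selected)
```

Only the query and value projections are selected here; the embeddings and feed-forward layers stay untouched unless they are added to the target list.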

Code Snippet: Wrapping a Classification Model with LoRA

The following code snippet shows how to integrate LoRA into a transformer-based model so that only small adapters on selected layers are trained, keeping computational costs low:

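from transformers import AutoModelForSequenceClassification
from peft import LoraConfig, TaskType, get_peft_model

model = AutoModelForSequenceClassification.from_pretrained("base_model_name")

# LoRA settings; target_modules assumes BERT-style layer names ("query", "value")
lora_config = LoraConfig(
    task_type=TaskType.SEQ_CLS,
    r=4,                # rank of the low-rank matrices
    lora_alpha=16,      # the update is scaled by lora_alpha / r
    lora_dropout=0.1,
    target_modules=["query", "value"],
)

lora_model = get_peft_model(model, lora_config)  # freezes the base model, adds adapters
lora_model.print_trainable_parameters()
lora_model.train()  # switches to training mode; run a training loop or Trainer next

This version uses the Hugging Face PEFT library: LoraConfig specifies the rank, scaling, dropout, and the layers to adapt, and get_peft_model wraps the model so that only the LoRA matrices (and, for sequence classification, typically the classification head) are trainable. Calling train() only puts the model in training mode; the optimization itself still has to be run, for example with a standard PyTorch loop or the transformers Trainer.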
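To get a feel for how few parameters are trained, the arithmetic below assumes a BERT-base-sized encoder (12 layers, hidden size 768, roughly 110 million parameters) with rank-4 LoRA on the query and value projections of every layer. These sizes are assumptions for illustration, and the classification head, which is usually also trained, is not counted.

```python
def lora_param_count(num_layers, hidden_size, rank, matrices_per_layer):
    """Trainable LoRA parameters for square hidden_size x hidden_size projections.

    Each adapted matrix gets A (rank x hidden_size) and B (hidden_size x rank).
    """
    per_matrix = rank * (hidden_size + hidden_size)
    return num_layers * matrices_per_layer * per_matrix


trainable = lora_param_count(num_layers=12, hidden_size=768, rank=4, matrices_per_layer=2)
base_params = 110_000_000   # approximate size of a BERT-base model
print(f"{trainable:,} trainable LoRA parameters "
      f"(about {trainable / base_params:.2%} of the base model)")
```

Doubling the rank doubles the adapter size, so r is the main knob for trading capacity against cost.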

Evaluating and Optimizing LoRA Fine-Tuning

After fine-tuning, it is essential to evaluate both how well the model meets its objectives and how many resources it consumes. Accuracy, F1 score, and inference time are useful metrics for this. Because LoRA trains far fewer parameters than full fine-tuning, it requires less training compute and memory, which reduces costs.

Key Evaluation Metrics:

- Accuracy: Measures the overall correctness of the model's predictions.

- F1 Score: The harmonic mean of precision and recall, which is more informative than accuracy for classifiers with highly imbalanced classes.

- Inference Speed: Measures how quickly the fine-tuned model produces outputs, which is critical for real-time data processing applications.
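Inference speed can be measured by timing repeated calls. The helper below is a generic sketch using Python's time.perf_counter; predict_fn is a placeholder for whatever function runs your fine-tuned model, and the toy function in the example only stands in for a real model.

```python
import statistics
import time


def measure_latency(predict_fn, inputs, warmup=3, repeats=20):
    """Return median and worst per-call latency in milliseconds.

    A few warm-up calls are discarded, since the first calls often include
    one-time costs such as caching or lazy initialization.
    """
    for _ in range(warmup):
        predict_fn(inputs)
    timings_ms = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict_fn(inputs)
        timings_ms.append((time.perf_counter() - start) * 1000)
    return {"median_ms": statistics.median(timings_ms), "max_ms": max(timings_ms)}


# Toy stand-in for a model's prediction function
def toy_predict(batch):
    return [sum(v * v for v in row) for row in batch]


stats = measure_latency(toy_predict, [[0.1] * 512] * 8)
print(stats)
```

Reporting the median alongside the worst case gives a more stable picture than a single timing, which can be skewed by background load.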

Code Snippet: Evaluating Model Accuracy Post Fine-Tuning

The following code snippet demonstrates how to calculate the model’s accuracy after fine-tuning using LoRA for LLMs. It compares the model’s predicted outputs against the actual labels to measure performance.

from sklearn.metrics import accuracy_score

# Example of evaluating model accuracy after fine-tuning with LoRA

y_true = [0, 1, 0, 1] # True labels

y_pred = [0, 1, 1, 0] # Predicted labels

accuracy = accuracy_score(y_true, y_pred)

print(f"Model Accuracy: {accuracy:.2f}")

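This snippet uses scikit-learn's accuracy_score function to compare the predicted labels (y_pred) with the true labels (y_true). Two of the four predictions match, so it prints "Model Accuracy: 0.50". In practice, y_pred comes from running the fine-tuned model on a held-out test set, and a higher score there indicates more effective fine-tuning.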
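Because accuracy can be misleading on imbalanced data, the F1 score is worth computing alongside it. The sketch below computes precision, recall, and F1 for the positive class (label 1) from scratch on the same example labels; scikit-learn's f1_score with its default binary setting computes the same quantity for this input.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary precision, recall and F1 (their harmonic mean) for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [0, 1, 0, 1]  # True labels
y_pred = [0, 1, 1, 0]  # Predicted labels
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(f"Precision: {precision:.2f}, Recall: {recall:.2f}, F1: {f1:.2f}")
```

For these labels, one of the two predicted positives is correct and one of the two actual positives is found, so precision, recall, and F1 are all 0.50.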

Optimizing LoRA Fine-Tuning:

- Hyperparameter Tuning: Try different values for the learning rate, batch size, and LoRA rank to achieve the best convergence and accuracy.

- Cross-Validation: Evaluate the model on held-out data, for example with k-fold cross-validation, to check how well it generalizes.

- Regularization: Use techniques such as dropout or weight decay to reduce variance and avoid overfitting.
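Hyperparameter tuning and cross-validation are often combined into a grid search. In the sketch below, score_fn is a hypothetical placeholder for a function that fine-tunes with the given hyperparameters on the training indices and returns a validation metric; the toy scoring function in the example is fabricated purely to exercise the code. Folds are contiguous here; in practice the data is usually shuffled first.

```python
from itertools import product


def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds; yield (train_idx, val_idx) pairs."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size


def grid_search(param_grid, score_fn, n, k):
    """Return (best_params, best_mean_score) over all combinations in param_grid."""
    keys = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[key] for key in keys)):
        params = dict(zip(keys, values))
        scores = [score_fn(params, train, val) for train, val in k_fold_indices(n, k)]
        mean = sum(scores) / len(scores)
        if mean > best_score:
            best_params, best_score = params, mean
    return best_params, best_score


def toy_score(params, train_idx, val_idx):
    # Fabricated stand-in that peaks at learning_rate=2e-4, r=8
    return -abs(params["learning_rate"] - 2e-4) * 1e3 - abs(params["r"] - 8) * 0.01


grid = {"learning_rate": [1e-4, 2e-4, 5e-4], "r": [4, 8, 16]}
best, score = grid_search(grid, toy_score, n=100, k=5)
print(best, score)
```

Because each grid point is evaluated on every fold, the cost grows with both the grid size and k, which is where LoRA's cheaper training runs help.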

Use Cases of LoRA in Large Language Models

LoRA is changing how AI applications are built across sectors by offering an efficient, fast, and cost-effective way to fine-tune LLMs. Because it can produce accurate, specialized models at low hardware cost, it brings organizations closer to their goal of deploying AI solutions.

Key Applications of LoRA for LLMs:

- Chatbot and Conversational AI: LoRA can adapt chatbots to particular fields, such as healthcare, finance, or customer service, without retraining the entire LLM. This produces responses that fit the domain context better.

- Sentiment Analysis & Text Classification: Companies use LoRA to build specialized sentiment and classification models on top of LLMs, which improves their existing sentiment analysis processes. This allows businesses to monitor customer sentiment and brand reputation more accurately.

- Domain-Specific AI Assistants: LoRA helps adapt AI assistants to legal, medical, or technical domains so that they incorporate domain knowledge.

Challenges and Future Directions in LoRA Fine-Tuning

Despite its overall usefulness, LoRA still faces issues that can limit its use in complex AI systems. Notable concerns include:

- Handling Highly Dynamic Datasets: LoRA may struggle when the data changes frequently and the model must be updated continuously. A fixed low-rank update may not transfer well to such continual-learning situations.

- Trade-off between performance and efficiency: Although LoRA effectively lowers training costs, some tasks may still require full fine-tuning to obtain the best results.

- Scalability Constraints: Although the method enables parameter reuse, it tends to work best with single-modality architectures and may be harder to apply to multi-modal ones.

Future Directions

- Enhanced Adaptation Mechanisms: Follow-up work is exploring modified versions of LoRA, as well as combinations with sparse fine-tuning, to improve adaptation strategies.

- Automated Hyperparameter Tuning: Automating the selection of LoRA's hyperparameters, for example with AI-driven search, could improve results without manual tuning.

- Improved Evaluation Metrics: Developing better benchmarks for LoRA's real-world performance beyond traditional accuracy assessments.

Conclusion

LoRA has made fine-tuning large language models considerably more cost-effective. It reduces computational and memory requirements while largely maintaining performance, which makes it well suited to industrial applications. Challenges remain, such as handling highly dynamic datasets, and the technique continues to improve. As the need for optimized models grows, LoRA is likely to become increasingly important for large-scale, cost-efficient AI development.