Graph Neural Networks and Their Applications

Graph neural networks (GNNs) are revolutionizing the way we analyze interconnected data across domains. Whether it's modeling social networks, predicting protein interactions, or even generating realistic molecular graphs, GNNs are at the forefront.

This article explores these powerful deep learning architectures. We'll cover GNN basics, popular variants like GCN and GAT, real-world applications of GNNs, as well as challenges and future directions.

What Exactly Are Graph Neural Networks?

In essence, GNNs are neural networks capable of processing graph-structured data. This includes anything from social networks to biological protein-interaction networks to even public transit systems.

Formally, a graph contains nodes (vertices) and edges connecting node pairs. Each node and edge can hold useful feature information. Unlike images or text, which have a regular grid or sequence structure, graphs vary in size and connectivity and have no fixed node ordering. This is where graph neural networks come in.

GNN architectures leverage message-passing algorithms to aggregate neighborhood features for each node. This gives every node a convolved feature representation of its local graph structure. The neural network layers then operate on these learned node embeddings to output relevant predictions.
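To make the aggregation step concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions, not a full GNN layer: there are no learned weights, the aggregator is a simple mean, and the names `message_passing_step`, `features`, and `neighbors` are made up for this example.

```python
def message_passing_step(features, neighbors):
    """One round of mean-aggregation message passing.

    features:  dict mapping node -> list of floats
    neighbors: dict mapping node -> list of neighboring nodes
    Returns a new dict in which each node's vector is the mean of its own
    vector and its neighbors' vectors.
    """
    updated = {}
    for node, own in features.items():
        # Include the node itself so isolated nodes keep their features.
        group = [own] + [features[n] for n in neighbors.get(node, [])]
        updated[node] = [
            sum(vec[i] for vec in group) / len(group) for i in range(len(own))
        ]
    return updated


# Toy path graph a - b - c with one-dimensional features.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
features = {"a": [1.0], "b": [0.0], "c": [0.0]}

one_hop = message_passing_step(features, neighbors)
two_hop = message_passing_step(one_hop, neighbors)
```

After one round, node `c` still sees nothing of node `a`, because they are two hops apart. After the second round some of `a`'s signal has reached `c` through `b`, which is how repeated rounds widen the neighborhood each node summarizes.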

For instance, GNNs can classify phenotype roles of individual proteins in a biological network based on their connections. The key benefit is explicitly encoding domain structure within the neural network itself.

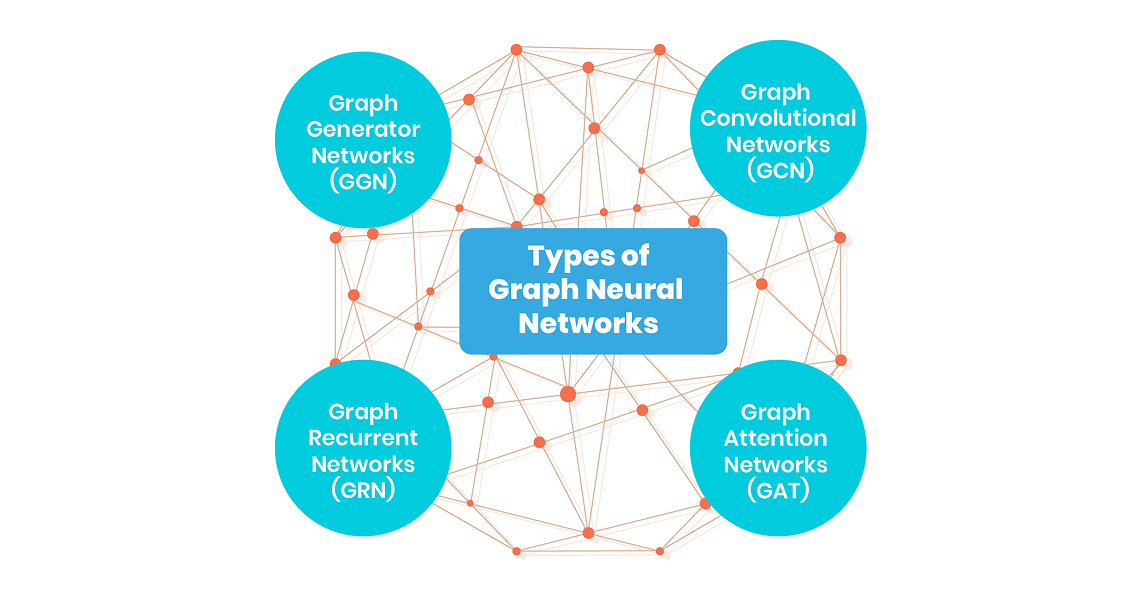

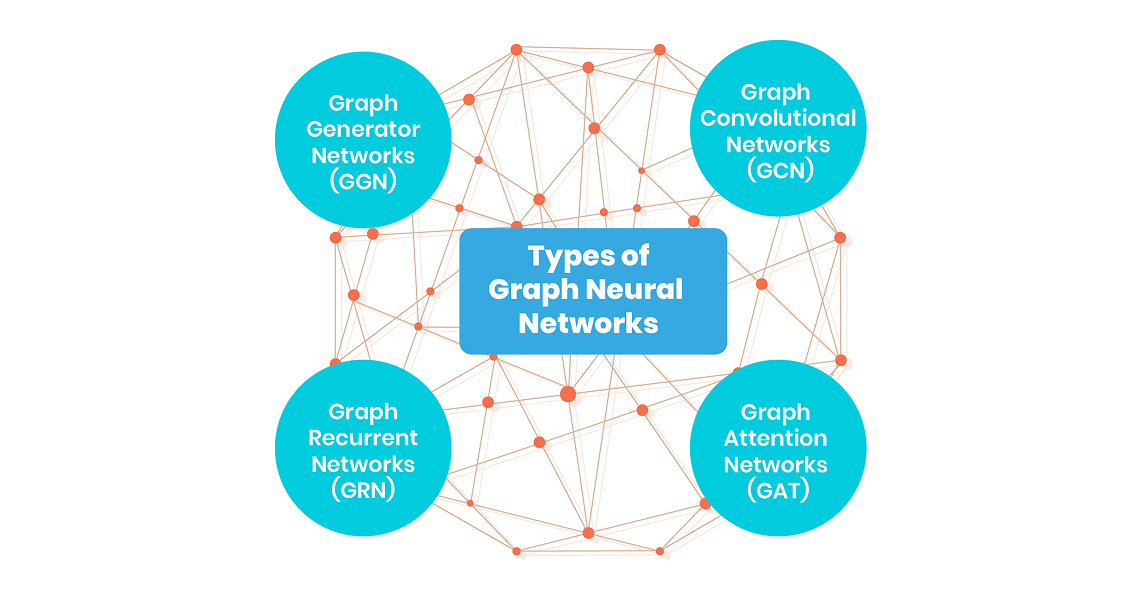

Types of Graph Neural Networks

There are several types of graph neural networks, each with unique strengths:

1. Graph Convolutional Networks (GCN)

Graph convolutional networks (GCNs) are inspired by convolutional neural networks used for grid-based data like images. The key idea in GCNs is propagating information between node neighbors using convolutional filters.

In traditional CNNs, the convolutional filters slide over the input matrix using shared weights to extract spatial features. In GCNs, the filters operate on the graph structure to aggregate feature information from neighboring nodes. A single layer lets each node incorporate the features of its direct neighbors; stacking layers extends this to multi-hop neighbors further away on the graph.

As layers are stacked, nodes gather information from a wider neighborhood, much like the growing receptive field in deeper CNNs. A key innovation was introducing efficient localized convolutional filters that could scale to large graphs with thousands or millions of nodes. An efficient layer-wise propagation rule was proposed in the seminal "Semi-Supervised Classification with Graph Convolutional Networks" paper (Kipf & Welling, ICLR 2017). This made GCNs feasible on much larger graphs than earlier attempts.
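The propagation rule from the Kipf & Welling paper can be written as H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), where A is the adjacency matrix, I adds self-loops, D is the degree matrix of A + I, H holds the node features, and W is a learned weight matrix. The sketch below applies that rule once using nested lists. The toy graph, the feature values, and the fixed `weights` are assumptions chosen for illustration; a real implementation would use sparse tensor operations and learn the weights by gradient descent.

```python
import math


def matmul(x, y):
    """Multiply two matrices stored as nested lists."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def gcn_layer(adjacency, features, weights):
    """Apply ReLU(D^-1/2 (A + I) D^-1/2 H W) to dense nested-list inputs."""
    n = len(adjacency)
    # Add self-loops so each node keeps its own features: A_tilde = A + I.
    a_tilde = [
        [adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    # Symmetric normalization by the degrees of A_tilde.
    inv_sqrt_deg = [1.0 / math.sqrt(sum(row)) for row in a_tilde]
    a_hat = [
        [inv_sqrt_deg[i] * a_tilde[i][j] * inv_sqrt_deg[j] for j in range(n)]
        for i in range(n)
    ]
    pre_activation = matmul(matmul(a_hat, features), weights)
    return [[max(0.0, value) for value in row] for row in pre_activation]


# Path graph 0 - 1 - 2 with two input features and one output feature.
adjacency = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights = [[1.0], [1.0]]  # illustrative values, not trained
hidden = gcn_layer(adjacency, features, weights)
```

The normalization keeps high-degree nodes from dominating the sum, and the whole layer is a few matrix products, which is what makes it cheap on sparse graphs.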

GCNs made analysis practical on large real-world graphs like social networks, recommendation systems, protein-interaction networks, and more. Later work improved their accuracy, scalability and interpretability. Variants like GraphSAGE and FastGCN use sampling to make them more computationally efficient.

2. Graph Attention Networks (GAT)

Graph attention networks incorporate attention mechanisms when aggregating information from neighboring nodes. The key motivation is that in real graphs, not every neighbor provides equally useful information from a node's perspective.

For example, in a social network, close friends may be more influential than acquaintances when classifying user attributes. Similarly, in a protein-interaction network, some interaction partners are likely more informative than others for a given biological prediction.

GATs implement this by weighting neighbors differently based on an attentional scoring function before feature aggregation. This allows adapting the model better to varied graph connectivity patterns.

The seminal "Graph Attention Networks" paper (Veličković et al., ICLR 2018) first proposed multi-head attention layers for aggregating neighbor features, in place of the fixed neighbor weights of a standard GCN. Each attention head can focus on different aspects of the neighborhood, and the embeddings the heads produce are concatenated (or averaged in the final layer) before further processing. This increases expressiveness across diverse graph analytical tasks.
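As a rough illustration of the scoring function, the sketch below computes single-head attention weights in the form used by the original GAT paper: score each neighbor with LeakyReLU(a · [W h_i || W h_j]), then normalize the scores with a softmax over the neighborhood. The feature vectors are assumed to be already projected by W, and the node names and the attention vector `attn_vector` are invented values for this example, not learned parameters.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def attention_weights(node, neighbors, projected, attn_vector):
    """Softmax-normalized attention of `node` over `neighbors` (one head).

    projected:   dict mapping node -> already-projected feature vector (W h)
    attn_vector: list of length 2 * d, standing in for the learnable vector a
    """
    scores = []
    for other in neighbors:
        concat = projected[node] + projected[other]  # [W h_i || W h_j]
        scores.append(leaky_relu(sum(a * x for a, x in zip(attn_vector, concat))))
    # Numerically stable softmax over the neighborhood.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return {other: e / total for other, e in zip(neighbors, exps)}


def attend(node, neighbors, projected, attn_vector):
    """Attention-weighted sum of the neighbors' projected features."""
    alpha = attention_weights(node, neighbors, projected, attn_vector)
    dim = len(projected[node])
    return [sum(alpha[n] * projected[n][i] for n in neighbors) for i in range(dim)]


projected = {"user": [1.0, 0.0], "friend": [1.0, 0.0], "acquaintance": [0.0, 1.0]}
attn_vector = [0.0, 0.0, 1.0, -1.0]  # illustrative, not trained
alpha = attention_weights("user", ["friend", "acquaintance"], projected, attn_vector)
aggregated = attend("user", ["friend", "acquaintance"], projected, attn_vector)
```

With these made-up values the friend receives a larger weight than the acquaintance. A multi-head layer would run several such heads with separate parameters and concatenate their outputs.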

Later GAT variants improved efficiency and accuracy, with applications in graph classification, recommendation systems and modeling biochemical protein networks.

3. Graph Recurrent Networks (GRN)

Graph recurrent networks leverage key concepts from recurrent neural network architectures like LSTMs and GRUs. The motivation is modeling dynamic graphs that evolve over time, like social networks or transaction networks.

Recurrent models contain inner loop memory units that enable capturing temporal state. Analogously, graph RNNs update node states using previous states of neighboring nodes. This inherently suits dynamic graphs where nodes interact over time.
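The idea of updating a node from its neighbors' previous states can be sketched with a deliberately simplified recurrent cell. This is a hypothetical toy, not any specific published architecture: states are scalars, the weights `w_in`, `w_self`, and `w_nbr` are fixed rather than learned, and the graph is given as a list of per-timestep snapshots.

```python
import math


def recurrent_step(states, inputs, neighbors, w_in=1.0, w_self=0.5, w_nbr=0.5):
    """Compute each node's next state from its input, its own previous state,
    and the mean previous state of its current neighbors."""
    new_states = {}
    for node in states:
        nbrs = neighbors.get(node, [])
        nbr_mean = sum(states[n] for n in nbrs) / len(nbrs) if nbrs else 0.0
        new_states[node] = math.tanh(
            w_in * inputs.get(node, 0.0) + w_self * states[node] + w_nbr * nbr_mean
        )
    return new_states


# Each snapshot is (adjacency at time t, external inputs at time t).
snapshots = [
    ({"a": ["b"], "b": ["a"], "c": []}, {"a": 1.0}),   # t=0: only a-b, a is excited
    ({"a": ["b"], "b": ["a", "c"], "c": ["b"]}, {}),   # t=1: edge b-c appears
    ({"a": ["b"], "b": ["a", "c"], "c": ["b"]}, {}),   # t=2: same structure
]

states = {"a": 0.0, "b": 0.0, "c": 0.0}
history = []
for adjacency, inputs in snapshots:
    states = recurrent_step(states, inputs, adjacency)
    history.append(states)
```

The signal injected at `a` reaches `b` one step later and `c` one step after that, once the edge between `b` and `c` exists. The hidden state is what carries information across both time and graph structure.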

The "GraphRNN: Generating Realistic Graphs with Deep Auto-regressive Models" paper (You et al., 2018) applied recurrent networks to graph generation, building each graph as a sequence of node and edge additions. Related recurrent approaches such as Graph Echo State Networks (GraphESNs) bring reservoir computing to graph-structured data.

Besides graph generation, GRNs broadly suit graph evolution modeling - like dynamic social interactions, traffic network flows or molecular dynamics simulations. The node recurrence captures complex spatial-temporal effects in a natural way.

4. Graph Generator Networks (GGN)

Graph generator networks are generative models capable of creating entirely new graphs with similar characteristics to a corpus of real-world input graphs. This allows creating plausible synthetic graphs for simulations, data augmentation etc.

Different adaptations of GANs and VAEs have been proposed for graph generation. In the GAN-based approaches, two networks compete against each other: the generator tries to create realistic novel graphs, while the discriminator tries to detect fake ones. This adversarial dynamic helps fit the complex graph distributions found in the real world.

The "GraphGAN: Graph Representation Learning with Generative Adversarial Nets" paper (Wang et al., 2018) was an early work bridging GANs with graph analysis. GGNs aim to reproduce graph properties such as degree distribution, diameter, and clustering coefficients from real input graphs.
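One practical way to check whether a generated graph resembles the real ones is to compare such statistics directly. The sketch below computes a degree distribution and an average clustering coefficient for graphs stored as adjacency sets. The two small graphs are made-up stand-ins for a real graph and a generated one, and the function names are chosen for this example.

```python
from collections import Counter


def degree_distribution(adj):
    """Map each degree to the number of nodes that have it."""
    return Counter(len(nbrs) for nbrs in adj.values())


def local_clustering(adj, node):
    """Fraction of pairs of a node's neighbors that are themselves connected."""
    nbrs = list(adj[node])
    k = len(nbrs)
    if k < 2:
        return 0.0
    links = sum(
        1 for i in range(k) for j in range(i + 1, k) if nbrs[j] in adj[nbrs[i]]
    )
    return 2.0 * links / (k * (k - 1))


def average_clustering(adj):
    return sum(local_clustering(adj, node) for node in adj) / len(adj)


# "Real" graph: a triangle 0-1-2 with a pendant node 3 attached to node 2.
real = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}
# "Generated" graph: a simple path 0-1-2-3 with the same number of nodes.
generated = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}

real_degrees = degree_distribution(real)
generated_degrees = degree_distribution(generated)
real_clustering = average_clustering(real)
generated_clustering = average_clustering(generated)
```

Here the path graph has no triangles at all, so its clustering coefficient is zero while the real graph's is well above zero. A gap like this would suggest the generator has not captured the local structure of the data.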

GGNs find diverse applications in anonymizing graphs by creating surrogates, modeling molecular compounds, generating infrastructure network topologies, and data augmentation for further GNN training.

Besides these, active research is expanding the boundaries of graph network architectures for specialized applications.

Real-world Applications of GNNs

Thanks to their innate graph processing capabilities, GNNs enable transformative solutions across domains:

- Fraud Detection: Capturing financial transaction networks as graphs, GNNs can learn patterns to identify fraudulent accounts and activities.
- Recommendation Systems: GNNs leverage complex user-item interactions to provide accurate personalized suggestions.
- Drug Discovery: Protein-interaction networks and molecular graphs fed into GNNs can accelerate the discovery of new biomarkers and drug candidates.
- Image Recognition: Scenes can be represented as semantic graphs encoding objects and their relationships, which makes them well suited to GNN-based scene understanding.
- Natural Language Processing: Graphs model linguistic syntax and semantics effectively. GNNs tackle tasks like text classification, machine translation, summarization etc.
- Traffic Forecasting: Road networks are spatio-temporal graphs with traffic data as node features. GNN-based forecasters have been reported to outperform traditional time-series methods.

The list goes on, from program verification to brain network analysis. We've only scratched the surface of domains benefiting from explicitly modeling structure with GNNs.

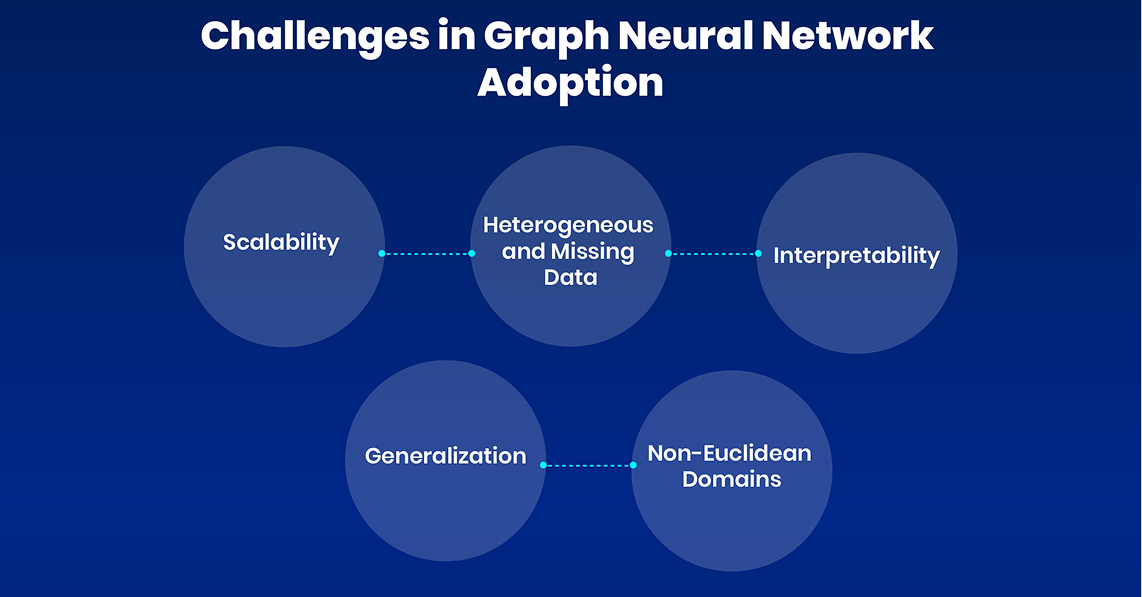

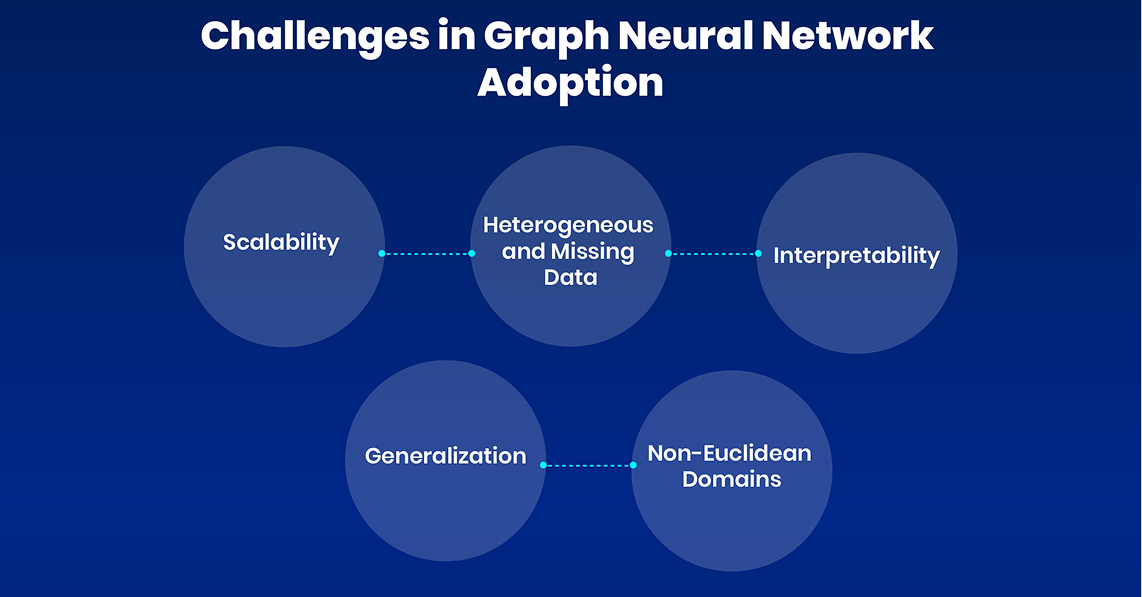

Challenges in GNN Adoption

While Graph Neural Networks (GNNs) have shown great promise in analyzing graph data across domains like recommender systems, drug discovery, and social network analysis, there remain some key challenges in unlocking their full potential:

Scalability

Analyzing massive graphs with billions of nodes poses significant computational challenges for GNNs. The cost of each message-passing layer grows with the number of edges, and the multi-hop neighborhood a single node depends on can grow exponentially with the number of layers, a problem often called neighbor explosion. This hampers the scalability of GNNs to industrial-scale graphs.

Some techniques to improve scalability include:

- Parallelizing computation across GPUs or clusters
- Developing efficient pooling methods to reduce graph size
- Using sampling or approximation methods during training
- Designing specialized data structures and algorithms optimized for sparse graph operations

However, scaling GNNs to the size of social networks or biological interaction networks, with trillions of edges, remains an active area of research.
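Of the techniques listed above, neighbor sampling is the easiest to show in a few lines. The sketch below caps how many neighbors each node contributes per layer, in the spirit of GraphSAGE-style sampling; it is a simplified illustration, and `sample_neighborhood`, `fanouts`, and the toy hub graph are assumptions made for this example.

```python
import random


def sample_neighborhood(adj, seeds, fanouts, rng):
    """Collect the nodes needed to compute embeddings for `seeds` when each
    layer looks at no more than `fanout` sampled neighbors per node.

    adj:     dict mapping node -> list of neighbors
    fanouts: one neighbor cap per GNN layer, e.g. [5, 5] for two layers
    """
    needed = set(seeds)
    frontier = set(seeds)
    for fanout in fanouts:
        next_frontier = set()
        for node in frontier:
            nbrs = adj.get(node, [])
            # Keep all neighbors if there are few, otherwise sample a subset.
            picked = nbrs if len(nbrs) <= fanout else rng.sample(nbrs, fanout)
            next_frontier.update(picked)
        needed |= next_frontier
        frontier = next_frontier
    return needed


# A hub node 0 connected to 1000 leaf nodes.
adj = {0: list(range(1, 1001))}
for leaf in range(1, 1001):
    adj[leaf] = [0]

rng = random.Random(0)
needed = sample_neighborhood(adj, seeds=[0], fanouts=[5, 5], rng=rng)
```

Without sampling, a two-layer model would have to touch all 1001 nodes to embed the hub. With a fanout of 5 per layer the mini-batch touches only the hub and five leaves, at the price of a noisier estimate of the full aggregation.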

Heterogeneous and Missing Data

Real-world graphs often contain varied data types (heterogeneous) and missing attribute values. This complicates GNN training and affects model performance.

Robust data preprocessing is necessary before feeding graphs to GNNs. This includes:

- Handling a variety of feature types such as text, images, and scalars
- Imputing missing values
- Mapping features to unified vector representations
- Performing feature normalization and dimensionality reduction

Special techniques like relational GNNs show promise in directly modeling heterogeneity. But handling very sparse graphs with lots of missing data continues to be challenging.
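Two of the preprocessing steps listed above, imputing missing values and normalizing features, can be sketched as follows. Mean imputation and z-score standardization are only one simple choice among many, and the function name and the toy feature table are assumptions for illustration.

```python
import math


def impute_and_standardize(rows):
    """Fill missing entries (None) with the column mean, then rescale each
    column to zero mean and unit variance.

    rows: list of per-node feature lists, all of the same length
    """
    cleaned = [list(row) for row in rows]
    for col in range(len(rows[0])):
        observed = [row[col] for row in rows if row[col] is not None]
        mean = sum(observed) / len(observed) if observed else 0.0
        for row in cleaned:
            if row[col] is None:
                row[col] = mean
        # Imputing with the mean leaves the column mean unchanged.
        std = math.sqrt(sum((row[col] - mean) ** 2 for row in cleaned) / len(cleaned))
        for row in cleaned:
            row[col] = (row[col] - mean) / std if std > 0 else 0.0
    return cleaned


# Three nodes, two scalar features, two missing values.
node_features = [[1.0, 10.0], [3.0, None], [None, 30.0]]
prepared = impute_and_standardize(node_features)
```

After this step every node has a complete feature vector on a comparable scale, and the imputed entries sit at zero, the standardized column mean. For heavily incomplete data a simple fill like this can distort the signal, which is part of why missing data remains a challenge.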

Interpretability

Like other deep neural networks, GNN models behave like black boxes, offering little insight into their internal logic. This poses challenges in terms of model debugging, explanation and transparency.

Some initiatives around GNN interpretability include:

- Visualizing the propagation of node signals
- Analyzing class activation maps to identify decisive graph regions
- Capturing model attention scores and feature importance
- Explaining predictions using techniques like LIME

But much work remains in making GNNs interpretable, especially as models become more complex.
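A simple perturbation-based probe gives a feel for how such explanations work: remove one edge at a time and record how much the prediction for a target node changes. This is an occlusion-style sketch in the same spirit as perturbation methods like LIME, not LIME itself, and `toy_model` is a stand-in for a trained GNN invented for this example.

```python
def toy_model(adj, features, target):
    """Stand-in for a trained GNN: the mean of the target's own feature
    and its neighbors' features."""
    group = [features[target]] + [features[n] for n in adj[target]]
    return sum(group) / len(group)


def edge_importance(model, adj, features, target):
    """Score each undirected edge by how much removing it changes the
    model's output for `target`."""
    baseline = model(adj, features, target)
    scores = {}
    for u in adj:
        for v in adj[u]:
            edge = tuple(sorted((u, v)))
            if edge in scores:
                continue  # undirected edge already scored from the other side
            pruned = {
                n: [m for m in nbrs if tuple(sorted((n, m))) != edge]
                for n, nbrs in adj.items()
            }
            scores[edge] = abs(model(pruned, features, target) - baseline)
    return scores


adj = {"t": ["x", "y"], "x": ["t"], "y": ["t", "z"], "z": ["y"]}
features = {"t": 0.0, "x": 3.0, "y": 0.0, "z": 5.0}
scores = edge_importance(toy_model, adj, features, target="t")
most_important = max(scores, key=scores.get)
```

In this toy setup the edge between `t` and `x` matters most, and the edge between `y` and `z` does not matter at all because the stand-in model only looks one hop out. With a real multi-layer GNN the same probe would also pick up influence from more distant edges, at the cost of one forward pass per edge.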

Generalization

A key challenge is the limited generalization capacity of GNNs: models trained on one graph distribution can degrade noticeably on test graphs that differ in size, density, or feature statistics. This limits portability across datasets.

Some ways to improve generalization:

- Extensive hyperparameter tuning
- Using model-agnostic training frameworks
- Augmenting graph structures during training
- Leveraging transfer learning from related tasks

But evaluating model generalization ability remains largely unsolved.
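As one example of the structural augmentation mentioned above, randomly dropping a fraction of edges in each training epoch exposes the model to slightly different graphs. The sketch below shows the idea on an edge list; `drop_edges` and the 30% drop rate are illustrative choices, not recommended settings.

```python
import random


def drop_edges(edges, drop_prob, rng):
    """Return a copy of an undirected edge list in which each edge is removed
    independently with probability `drop_prob`."""
    return [edge for edge in edges if rng.random() >= drop_prob]


edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0)]
rng = random.Random(42)

# A fresh perturbed copy of the graph for each of three training epochs.
epoch_graphs = [drop_edges(edges, drop_prob=0.3, rng=rng) for _ in range(3)]
```

The original edge list is left untouched, so evaluation can still run on the full graph while training sees a different random subgraph each epoch.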

Non-Euclidean Domains

Most GNN architectures embed nodes in flat Euclidean space. Extending them to non-Euclidean embedding geometries, such as hyperbolic or spherical spaces, so that they can represent graphs whose structure fits those geometries better, remains an open challenge.

Early research shows promise in using hyperbolic GNNs for hierarchical, tree-like graphs. But a lot more effort is required to make such geometric GNNs production-ready.
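To give a sense of what changes in a hyperbolic model, the sketch below computes the distance between two embeddings in the Poincaré ball, one common model of hyperbolic space: d(u, v) = arcosh(1 + 2 ||u - v||^2 / ((1 - ||u||^2)(1 - ||v||^2))). The example points are made up, and a full hyperbolic GNN would also need hyperbolic versions of aggregation and optimization, which are not shown here.

```python
import math


def poincare_distance(u, v):
    """Hyperbolic distance between two points strictly inside the unit ball."""
    squared_norm_u = sum(c * c for c in u)
    squared_norm_v = sum(c * c for c in v)
    squared_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    denominator = (1.0 - squared_norm_u) * (1.0 - squared_norm_v)
    return math.acosh(1.0 + 2.0 * squared_diff / denominator)


def euclidean_distance(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


origin = [0.0, 0.0]
near_center = [0.5, 0.0]
leaf_a = [0.9, 0.0]  # two points close to the boundary of the ball
leaf_b = [0.0, 0.9]

d_center = poincare_distance(origin, near_center)
d_leaves = poincare_distance(leaf_a, leaf_b)
d_leaves_flat = euclidean_distance(leaf_a, leaf_b)
```

Distances blow up near the boundary of the ball, so there is far more room out there than in flat space. That is why tree-like hierarchies, whose node counts grow quickly with depth, tend to fit in hyperbolic space with less distortion.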

These open challenges spur innovative research advancing GNNs - fueled by an abundance of high-impact applications!

The Road Ahead for Graph Neural Networks

We've only scratched the surface of what's possible with GNNs. Here are promising research frontiers that could transform how AI solves real-world problems:

- Multi-modal GNNs fusing graph data with other modalities like images or text for enhanced understanding.
- Generative GNN models creating highly realistic synthetic graphs that preserve key graph statistics.
- GNN advancements tackling fundamental barriers like scalability and model interpretability.
- Graph reinforcement learning combining the power of graphs and RL for new breakthroughs.
- Automated graph neural architecture search tailored to application needs.

By meshing graph analytics with deep learning, GNNs are already excelling across domains where explicitly modeling relationships is key. As research moves forward, these versatile architectures hold profound potential to push the boundaries of what AI can achieve. The GNN revolution has only just begun!