How do Large Language Models Work? How to Train Them?

In recent years, Large Language Models (LLMs) have emerged as powerful tools in artificial intelligence. They have revolutionized how we interact with technology. These models, such as GPT-3 by OpenAI and BERT by Google, are designed to understand, process, and generate human language with remarkable accuracy.

LLMs have a wide range of applications across industries, from content creation and customer service to healthcare and language translation. Their ability to generate human-like text and perform complex language tasks has made them invaluable in streamlining processes. Further improving productivity and enhancing user experiences.

Despite their advancements, LLMs also pose challenges, including issues related to bias and environmental impact. As we continue to explore the capabilities of these models, it's essential to understand how they actually work.

What is a Large Language Model?

A Large Language Model (LLM) is a sophisticated form of artificial intelligence (Ai) designed to understand, process, and generate human language. Like all language models, LLMs learn the patterns, structures, and relationships within a given language. However, what sets LLMs apart is their sheer size and complexity.

Traditionally, language models were used for narrow Ai tasks such as text translation. However, with advancements in hardware capabilities, the availability of vast datasets, and improvements in training techniques, the field of natural language processing has seen a shift towards building larger and more powerful models. These large language models, with billions of parameters, have significantly more computational requirements and training data than their predecessors.

One of the most notable examples of an LLM is GPT-3 (Generative Pre-trained Transformer 3), which has garnered attention for its ability to generate remarkably human-like text. Despite their advancements, LLMs are not without challenges, including issues related to bias and ethical concerns.

In essence, LLMs represent a significant advancement in Ai algorithms, offering immense potential for innovation and improvement across a wide range of fields.

Some examples of Large Language Models:

-

GPT-3 (Generative Pre-trained Transformer 3) by OpenAI. It is known for its advanced natural language generation capabilities.

-

BERT (Bidirectional Encoder Representations from Transformers) by Google. It excels in understanding context in language.

-

T5 (Text-to-Text Transfer Transformer) by Google. It is designed for various natural language processing tasks.

-

XLNet by Google Brain and Carnegie Mellon University. It improves on BERT's pretraining strategy.

-

RoBERTa (A Robustly Optimized BERT Approach) by Facebook AI. It is a variant of BERT with improved training techniques.

These models have been pivotal in advancing natural language processing and have numerous applications in Ai research and development.

How do Large Language Models Work?

Large Language Models (LLMs) operate on the principles of deep learning. They leverage neural network architectures to process and understand human languages. These models are typically based on transformer architectures, which use self-attention mechanisms to capture relationships between tokens in a sequence.

During training, LLMs are exposed to vast amounts of text data through a process called pre-training. This phase involves training the model on a diverse dataset to learn the nuances of language. Following pre-training, the model undergoes fine-tuning on specific tasks to enhance its performance in areas such as sentiment analysis, machine translation, or question answering.

One of the key innovations in transformer architecture is the self-attention mechanism. It allows the model to weigh the importance of different tokens in a sequence. This mechanism enables LLMs to capture complex language patterns and dependencies. This further makes them effective at generating coherent and contextually relevant text.

Additionally, LLMs employ tokenization methods to convert raw text into smaller units, such as words, subwords, or characters, for processing. These methods help the model understand the structure of the input text and improve its ability to generate accurate and meaningful responses.

LLMs represent a significant advancement in natural language processing, with the potential to revolutionize various industries by enabling more intuitive and natural interactions between humans and machines.

How are Large Language Models Trained?

Training a Large Language Model (LLM) involves two main stages: Pre-training and Fine-tuning.

-

In the pre-training stage, the model is exposed to a large, general-purpose dataset to learn high-level language features. This helps the model understand the basics of language, similar to how we learn language rules and vocabulary.

The pre-training process begins by pre-processing the text data to convert it into a format that the model can understand. The model's parameters are then randomly assigned, and the numerical representation of the text data is fed into the model. Using a loss function, the model's outputs are compared to the actual next word in a sentence, and its parameters are adjusted to minimize this difference. This process is repeated until the model's outputs reach an acceptable level of accuracy.

-

Once pre-training is complete, the model can be fine-tuned for specific tasks using new, task-specific data. Fine-tuning involves exposing the model to this new data and adjusting its parameters to better fit the new task. This allows the model to adapt its learned features to the specific requirements of the task, such as sentiment analysis or text summarization. Fine-tuning is more computationally efficient than pre-training and requires less data and power, making it a cost-effective method for adapting the model to different use cases.

Challenges in Training Large Language Models

Training Large Language Models (LLMs) comes with its share of challenges.

-

Computational Power: Training LLMs requires significant computational resources, making it expensive and resource intensive. Setting up the required computing power for training can cost millions of dollars.

-

Training Time: It can take months to train a large language model. Even after training, fine-tuning by humans is often necessary to achieve optimal performance.

-

Data Collection: Acquiring a large and diverse text corpus for training can be challenging. Also, there are concerns about the legality of data collection practices.

-

Environmental Impact: Training LLMs has a significant environmental impact, with some models generating carbon footprints equivalent to several cars over their lifetime. This raises concerns about the sustainability of large-scale Ai training practices in the era of climate change.

What are the Advantages of Large Language Models?

Large Language Models (LLMs) offer several advantages that make them valuable tools in various applications.

-

Human-like Text Generation: LLMs can generate text that closely resembles human writing. Further making them useful for content creation, translation, and conversational interfaces.

-

Efficiency and Productivity: LLMs can automate tasks such as email writing, document summarization, and code generation. This helps in freeing up human resources for more strategic work.

-

Accessibility: LLMs enable more intuitive interactions with technology, benefiting individuals with disabilities or those who are not proficient in traditional computer interfaces.

-

Zero-shot Learning: LLMs can generalize to new tasks without explicit training, allowing for adaptability to new applications and scenarios.

-

Handling Large Datasets: LLMs are efficient at processing vast amounts of data, making them suitable for tasks that require a deep understanding of extensive text corpora.

-

Fine-tuning Capability: LLMs can be fine-tuned on specific datasets or domains, enabling continuous learning and adaptation to specific use cases or industries.

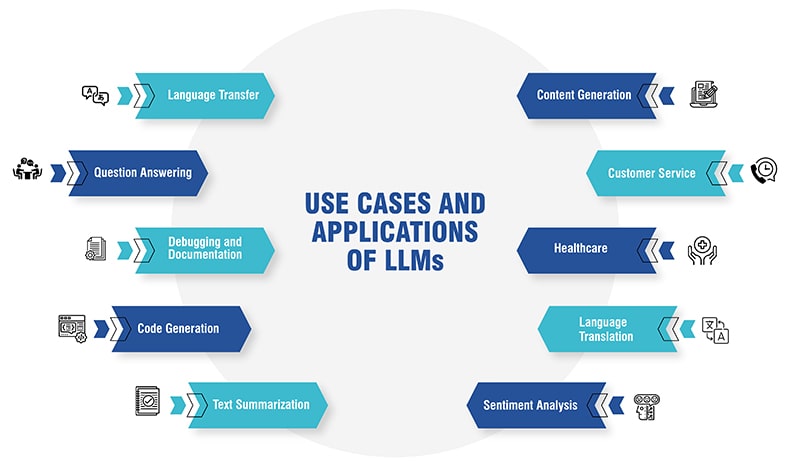

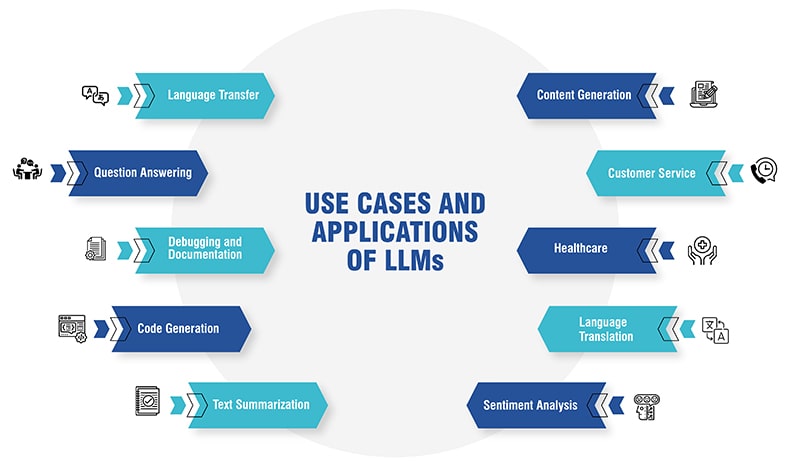

What are the Use Cases and Applications of LLMs?

Large Language Models (LLMs) are versatile tools with a wide range of use cases and applications across industries.

-

Content Generation: LLMs can create articles, blogs, and social media posts.

-

Customer Service: They can automate responses and provide personalized recommendations.

-

Healthcare: LLMs can analyze medical records and research papers to assist in diagnosis and treatment planning.

-

Language Translation: They support over 50 native languages and can translate text between languages.

-

Sentiment Analysis: LLMs can analyze text to determine sentiment and emotions.

-

Text Summarization: They can summarize long pieces of text into shorter, more concise summaries.

-

Code Generation: LLMs can generate code for specific tasks.

-

Debugging and Documentation: They can help debug code and assist in writing project documentation.

-

Question Answering: LLMs can answer a wide range of questions.

-

Language Transfer: They can convert text from one language to another and help correct grammatical mistakes.

Conclusion

Large Language Models (LLMs) represent a significant advancement in artificial intelligence, offering immense potential for innovation and improvement across various industries.

Despite their complexity and computational requirements, LLMs have proven to be valuable tools. They help in content generation, customer service, healthcare, language translation, sentiment analysis, and text summarization, among other applications. Their ability to generate human-like text, automate tasks, and adapt to new scenarios without explicit training makes them versatile and efficient.

However, challenges such as computational power, training time, data collection, and environmental impact remain important considerations. Despite these challenges, the advantages of LLMs in terms of efficiency, productivity, and accessibility make them an exciting area of research and development with promising future prospects.