How to Track ML Experiments and Manage Models with MLflow

Machine learning models have become an integral part of many applications and systems. Developing accurate and robust models, however, requires extensive experimentation with different model architectures, hyperparameters, datasets, and preprocessing techniques. As the number of experiments grows, it becomes increasingly difficult for data scientists and machine learning researchers to keep track of them, analyze results, reproduce models, and collaborate effectively.

This is where MLflow comes into the picture. MLflow is an open-source platform, originally developed by Databricks, for tracking machine learning experiments and model development workflows. It allows users to log parameters, metrics, models, and code versions during their experiments, and it provides tools to visualize experiments, compare model performance, and reproduce results. Overall, MLflow eases the management of the machine learning life cycle, from experimentation to production deployment.

In this article, we will look at how MLflow can be used for machine learning experimentation and model management. We will cover the key MLflow concepts, see how to set up tracking, log different artifacts, and manage models. By the end, you should be equipped to streamline your ML workflow with this platform.

What is MLflow?

MLflow is an open-source platform for thoroughly managing machine learning workflows. It is leveraged by MLOps teams and data scientists to efficiently track and develop their machine learning experiments and models from initial experimentation right through to full-scale production deployment and reporting.

At the core of MLflow are four principal components that work together to provide an end-to-end machine learning pipeline management solution. MLflow Tracking allows users to methodically record machine learning training sessions, which are termed "runs", and then mine the runs for insights using APIs in languages like Python, Java, and R.

The Models component standardizes how machine learning models are packaged so they can be reused. The Model Registry component stores models in a central repository and manages their lifecycle from version to version. Lastly, the Projects component bundles the code used in data science projects so that experiments can be replicated and findings reproduced.

MLflow Tracking

MLflow Tracking is the API and interface that logs information about machine learning experiments so the data can be queried later. It supports tracking key details of runs, including parameters, metrics, tags, and artifacts linked to a model training process. A run is the collection of this data tied to one specific training operation for a machine learning model.

Experiments are the basic unit of organization within MLflow. All runs are tied to an experiment, and experiments are where metrics and artifacts from distinct runs can be compared and retrieved for additional analysis using downstream tools. Experiments are hosted on an MLflow tracking server, which can run locally, on self-managed infrastructure, or as a managed service such as Azure Databricks.

Here is an example of how to use MLflow Tracking from Python. It starts a run and logs parameters, a metric, the trained model, and an artifact file.

```python
import mlflow
import mlflow.sklearn
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Load a small regression dataset bundled with scikit-learn
# (load_boston is no longer available in scikit-learn 1.2 and later)
X, y = load_diabetes(return_X_y=True)

# Split the data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Define parameters
n_estimators = 100
max_depth = 5
random_state = 42

# Start an MLflow run; everything logged inside the block belongs to it
with mlflow.start_run() as run:
    # Log parameters
    mlflow.log_param("n_estimators", n_estimators)
    mlflow.log_param("max_depth", max_depth)
    mlflow.log_param("random_state", random_state)

    # Train model
    model = RandomForestRegressor(n_estimators=n_estimators, max_depth=max_depth, random_state=random_state)
    model.fit(X_train, y_train)

    # Predict and evaluate
    predictions = model.predict(X_test)
    mse = mean_squared_error(y_test, predictions)

    # Log metrics
    mlflow.log_metric("mse", mse)

    # Log model
    mlflow.sklearn.log_model(model, "random_forest_model")

    # Log artifact (example: save predictions to a file and log it)
    with open("predictions.txt", "w") as f:
        for pred in predictions:
            f.write(f"{pred}\n")
    mlflow.log_artifact("predictions.txt")

    # Print out the details of the run
    print(f"Logged data to run {run.info.run_id}")
```

After running the script, you can view the logged data using the MLflow UI:

mlflow ui

Open the URL provided by the mlflow ui command in a web browser to explore the logged parameters, metrics, models, and artifacts.

Tracking UI

The MLflow Tracking UI provides a straightforward visualization of run details and experiment results. It allows monitoring run statuses, viewing metric curves over time, and drilling down into individual runs for artifact inspection. Runs can be filtered by parameter values, and metrics can be compared across runs from the same or different experiments. This gives good transparency into each step of a model's development and how experiments have evolved over numerous iterations.

MLflow Projects

MLflow Projects is the format for packaging and sharing machine learning code in a manner that supports reproducibility. A project bundles Python or R code, configuration files, and an environment specification (for example, a conda environment) so the code and its dependencies can be recreated.

Projects are versioned and stored in repositories to support collaboration. An MLproject file at the project root declares the environment and the entry points, so when the project is run, MLflow can set up the environment and launch the command. This allows code and experiments developed on one system to be reproduced on another.

Project Environments

Project environments defined in MLproject files specify the software dependencies and versions required to run projects. Conda is one supported way to create reproducible environments that isolate dependency requirements; virtualenv and Docker environments are supported as well. Pinning the environment greatly reduces inconsistencies between machines, although it cannot by itself guarantee identical results. Environment definitions help teams evaluate models under consistent, well-defined conditions.
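To make the project layout concrete, here is a hedged sketch that writes a minimal `MLproject` file and a conda environment file into a temporary directory. The project name, the `alpha` parameter, the `train.py` script, and the pinned Python version are all hypothetical, chosen only for illustration.

```python
import tempfile
from pathlib import Path

import yaml  # PyYAML, installed alongside MLflow

# Hypothetical project definition: one entry point with one float parameter
MLPROJECT = """\
name: demo_project
conda_env: conda.yaml
entry_points:
  main:
    parameters:
      alpha: {type: float, default: 0.5}
    command: "python train.py --alpha {alpha}"
"""

# Hypothetical environment specification referenced by the MLproject file
CONDA_ENV = """\
name: demo_env
channels:
  - conda-forge
dependencies:
  - python=3.10
  - pip
  - pip:
      - mlflow
      - scikit-learn
"""

project_dir = Path(tempfile.mkdtemp())
(project_dir / "MLproject").write_text(MLPROJECT)
(project_dir / "conda.yaml").write_text(CONDA_ENV)

# Parse the definition back to confirm it is valid YAML
spec = yaml.safe_load(MLPROJECT)
print(spec["entry_points"]["main"]["command"])
```

With a real `train.py` in the same directory, a command such as `mlflow run . -P alpha=0.3` would build the environment and launch the `main` entry point.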

MLflow Models

MLflow Models defines the standard structure for packaging machine learning models so they can be tracked, deployed, and reused. A packaged model includes metadata such as its flavor, the trained model artifacts, a signature, and optional sample input data used for inference. The Python API creates models from common frameworks like PyTorch, TensorFlow, and Scikit-learn, and these models can then be registered in the MLflow Model Registry, where they are versioned to keep a clear lineage.

MLflow Model Registry

MLflow Model Registry is a central store that manages machine learning models through all stages of the model lifecycle from version to version. It enables discovering, loading, deploying, and tracking models as they progress. All registered models remain versioned, so past versions can be recalled as needed. The registry acts as a common point of reference for teams to align around approved model versions. It is also accessible through a REST API, and registered models can be served as web services with MLflow's model serving tools.

MLflow Plugins

MLflow plugins let users extend its functionality, for instance, by developing custom backends for storing tracking data or artifacts. Plugins allow the platform to be tailored to specific needs and integrated with other tools. This extensible architecture lets MLflow adapt as requirements change.

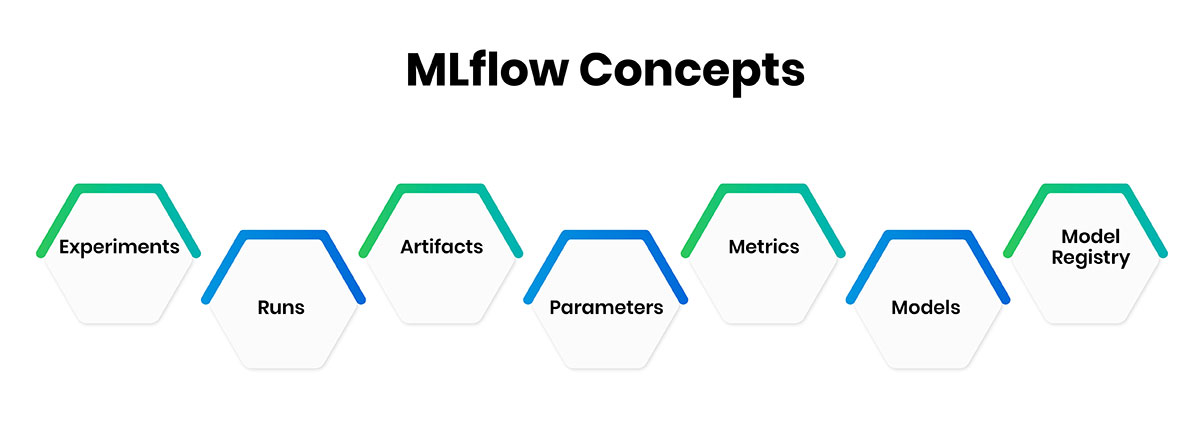

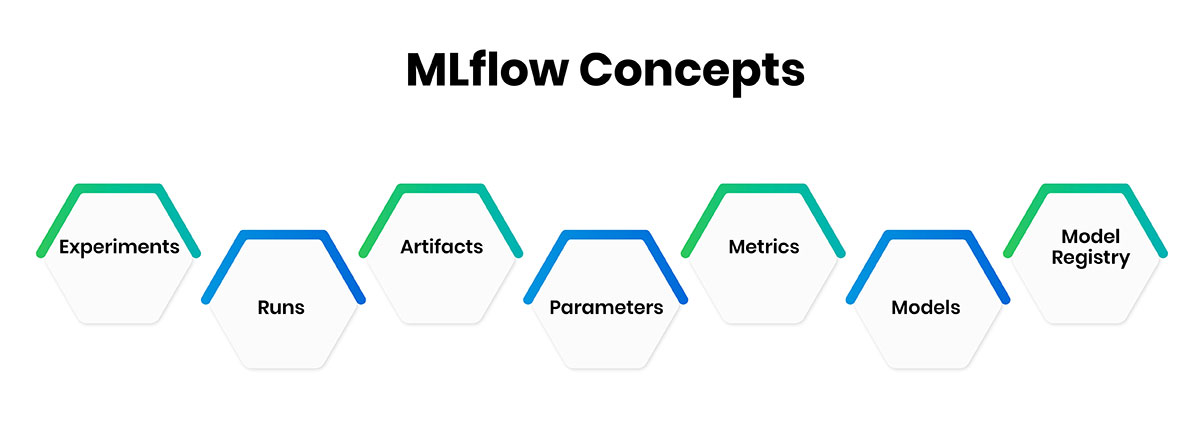

MLflow Concepts

Before jumping into the implementation, let's briefly discuss some key MLflow concepts:

- Experiments: MLflow organizes runs under experiments to group related trials. This allows comparing runs under the same experiment.

- Runs: A run refers to an individual execution of a script. Runs are logged within an experiment and have a unique ID.

- Artifacts: Artifacts are output files, such as models, images, and data files, that can be logged during a run for tracking.

- Parameters: Parameters are hyperparameters or arguments passed to the training function which can affect results.

- Metrics: Metrics are values computed during the run, like accuracy, loss, AUC, etc., to evaluate model performance.

- Models: The trained model artifacts (code, serialization format, etc.) can be logged along with metadata.

- Model Registry: A centralized model store for easy model versioning, registration, and deployment of ML models.

Now that we have covered the key MLflow concepts, let's see how to set up tracking and use these concepts in practice.

Setting Up MLflow Tracking

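MLflow separates where run metadata is stored (the backend store) from where artifacts are stored (the artifact store). The backend store can be a local file store or a SQL database such as SQLite, MySQL, or PostgreSQL, while artifacts can live on the local filesystem or in remote storage such as AWS S3. A local file store is convenient for local development, while a database combined with remote artifact storage is better suited to production loads.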

To get started with local file-based tracking:

- Install MLflow via `pip install mlflow`.

- No server has to be started for local tracking. With no configuration, MLflow records runs to a local store in the current working directory (an `mlruns` directory in most releases).

- Alternatively, call `mlflow.set_tracking_uri()` to log to a specific location, e.g., `mlflow.set_tracking_uri("file:///tmp/mlflow")`.

- You can now log runs, params, metrics, etc., which will be recorded to this local path.

- To view results, run `mlflow ui` in the terminal to launch the UI server. If you logged to a custom location, pass it with `--backend-store-uri`.

This sets up the basic MLflow tracking mechanism. Now, let's see how to log artifacts from within the code.

Logging Artifacts in Code

MLflow provides Python APIs to programmatically log artifacts from your machine learning scripts:

- Logging Parameters: To record hyperparameters, use `mlflow.log_param(key, value)`.

- Logging Metrics: For evaluation metrics, use `mlflow.log_metric(key, value)`.

- Logging Models: To package models, log them with a flavor API such as `mlflow.sklearn.log_model()`, or with `mlflow.pyfunc.log_model()` for custom Python models.

- Starting/Ending Runs: Mark the start and end of a run using `mlflow.start_run()` and `mlflow.end_run()`.

For example:

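```python
import mlflow
import mlflow.sklearn
from sklearn.datasets import load_iris
from sklearn.linear_model import SGDClassifier

X, y = load_iris(return_X_y=True)
learning_rate = 0.01

# Start run
mlflow.start_run()

# Log params
mlflow.log_param("learning_rate", learning_rate)

# Train model
model = SGDClassifier(learning_rate="constant", eta0=learning_rate, random_state=42)
model.fit(X, y)

# Log metrics (training accuracy, for illustration only)
mlflow.log_metric("accuracy", model.score(X, y))

# Log model with the scikit-learn flavor
mlflow.sklearn.log_model(model, "model")

# End run
mlflow.end_run()
```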

Everything logged here is stored under an automatically generated run ID and can be inspected in the MLflow UI. Let's now look at the model registry features.

Leveraging the MLflow Model Registry

The model registry organizes models as named entries, each with numbered versions and associated metadata. It provides a centralized catalog of models with the following features:

- Model Versioning: Each registration under an existing name creates a new version automatically. View version lineage and compare performance.

- Model Validation: Mark versions with stages, aliases, or tags to record their validation status before promotion. Approval rules, where available, are provided by the hosting platform or built on top of these.

- Models as APIs: Serve registered models as real-time scoring APIs with the MLflow model server (`mlflow models serve`).

- Model Search: Discover models by searching on names and tags.

To register a model:

```python
import mlflow

# `run_id` must identify a run that logged a model under the artifact path "model"
result = mlflow.register_model(model_uri=f"runs:/{run_id}/model", name="mymodel")
```

This registers the logged model under the given name and creates a new version automatically. A registered version can later be loaded with a URI of the form `models:/mymodel/<version>`. The model registry treats machine learning models as first-class citizens, supporting discovery, governance, and deployment workflows.

Reproducing Experiments

MLflow also helps make experiments reproducible by recording parameters, the source code version, and, for logged models, dependency information. To reproduce a past run:

- Navigate to the run in the UI or get the run ID programmatically.

- Retrieve its recorded parameters and source version, for example with `mlflow.get_run(run_id)`.

- Re-run the code at that version with the same parameters. If the code is packaged as an MLflow Project, `mlflow run` recreates the environment and launches the entry point.

MLflow thus addresses key challenges of machine learning experiment tracking, such as lost experiments, duplicated work, lack of transparency, and reproducibility issues. It can significantly accelerate machine learning development by streamlining the development cycle for robust models.

Conclusion

In conclusion, MLflow is an effective and comprehensive platform for managing machine learning workflows and experiments, with model management features and support for a wide range of machine learning libraries. With its four main components (Tracking, Projects, Models, and the Model Registry), MLflow provides an end-to-end solution for managing and deploying machine learning models.